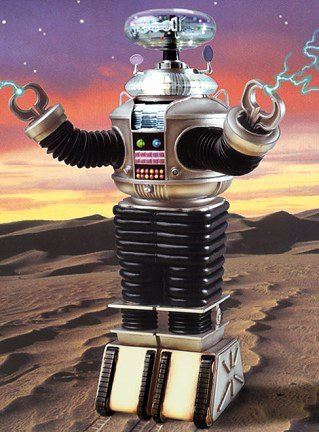

Trust me, Will Robinson...Danger, Danger!

Posted on 06/12/2006 6:18:24 PM PDT by annie laurie

In 1981 Kenji Urada, a 37-year-old Japanese factory worker, climbed over a safety fence at a Kawasaki plant to carry out some maintenance work on a robot. In his haste, he failed to switch the robot off properly. Unable to sense him, the robot's powerful hydraulic arm kept on working and accidentally pushed the engineer into a grinding machine. His death made Urada the first recorded victim to die at the hands of a robot.

This gruesome industrial accident would not have happened in a world in which robot behaviour was governed by the Three Laws of Robotics drawn up by Isaac Asimov, a science-fiction writer. The laws appeared in “I, Robot”, a book of short stories published in 1950 that inspired a recent Hollywood film. But decades later the laws, designed to prevent robots from harming people either through action or inaction (see table), remain in the realm of fiction.

Indeed, despite the introduction of improved safety mechanisms, robots have claimed many more victims since 1981. Over the years people have been crushed, hit on the head, welded and even had molten aluminium poured over them by robots. Last year there were 77 robot-related accidents in Britain alone, according to the Health and Safety Executive.

With robots now poised to emerge from their industrial cages and to move into homes and workplaces, roboticists are concerned about the safety implications beyond the factory floor. To address these concerns, leading robot experts have come together to try to find ways to prevent robots from harming people. Inspired by the Pugwash Conferences—an international group of scientists, academics and activists founded in 1957 to campaign for the non-proliferation of nuclear weapons—the new group of robo-ethicists met earlier this year in Genoa, Italy, and announced their initial findings in March at the European Robotics Symposium in Palermo, Sicily.

“Security, safety and sex are the big concerns,” says Henrik Christensen, chairman of the European Robotics Network at the Swedish Royal Institute of Technology in Stockholm, and one of the organisers of the new robo-ethics group. Should robots that are strong enough or heavy enough to crush people be allowed into homes? Is “system malfunction” a justifiable defence for a robotic fighter plane that contravenes the Geneva Convention and mistakenly fires on innocent civilians? And should robotic sex dolls resembling children be legally allowed?

These questions may seem esoteric but in the next few years they will become increasingly relevant, says Dr Christensen. According to the United Nations Economic Commission for Europe's World Robotics Survey, in 2002 the number of domestic and service robots more than tripled, nearly outstripping their industrial counterparts. By the end of 2003 there were more than 600,000 robot vacuum cleaners and lawn mowers—a figure predicted to rise to more than 4m by the end of next year. Japanese industrial firms are racing to build humanoid robots to act as domestic helpers for the elderly, and South Korea has set a goal that 100% of households should have domestic robots by 2020. In light of all this, it is crucial that we start to think about safety and ethical guidelines now, says Dr Christensen.

Stop right there

So what exactly is being done to protect us from these mechanical menaces? “Not enough,” says Blay Whitby, an artificial-intelligence expert at the University of Sussex in England. This is hardly surprising given that the field of “safety-critical computing” is barely a decade old, he says. But things are changing, and researchers are increasingly taking an interest in trying to make robots safer. One approach, which sounds simple enough, is to try to program them to avoid contact with people altogether. But this is much harder than it sounds. Getting a robot to navigate across a cluttered room is difficult enough without having to take into account what its various limbs or appendages might bump into along the way.

Regulating the behaviour of robots is going to become more difficult in the future, since they will increasingly have self-learning mechanisms built into them, says Gianmarco Veruggio, a roboticist at the Institute of Intelligent Systems for Automation in Genoa, Italy. As a result, their behaviour will become impossible to predict fully, he says, since they will not be behaving in predefined ways but will learn new behaviour as they go.

Then there is the question of unpredictable failures. What happens if a robot's motors stop working, or it suffers a system failure just as it is performing heart surgery or handing you a cup of hot coffee? You can, of course, build in redundancy by adding backup systems, says Hirochika Inoue, a veteran roboticist at the University of Tokyo who is now an adviser to the Japan Society for the Promotion of Science. But this guarantees nothing, he says. “One hundred per cent safety is impossible through technology,” says Dr Inoue. This is because ultimately no matter how thorough you are, you cannot anticipate the unpredictable nature of human behaviour, he says. Or to put it another way, no matter how sophisticated your robot is at avoiding people, people might not always manage to avoid it, and could end up tripping over it and falling down the stairs.

Legal problems

So where does this leave Asimov's Three Laws of Robotics? They were a narrative device, and were never actually meant to work in the real world, says Dr Whitby. Quite apart from the fact that the laws require the robot to have some form of human-like intelligence, which robots still lack, the laws themselves don't actually work very well. Indeed, Asimov repeatedly knocked them down in his robot stories, showing time and again how these seemingly watertight rules could produce unintended consequences.

In any case, says Dr Inoue, the laws really just encapsulate commonsense principles that are already applied to the design of most modern appliances, both domestic and industrial. Every toaster, lawn mower and mobile phone is designed to minimise the risk of causing injury—yet people still manage to electrocute themselves, lose fingers or fall out of windows in an effort to get a better signal. At the very least, robots must meet the rigorous safety standards that cover existing products. The question is whether new, robot-specific rules are needed—and, if so, what they should say.

“Making sure robots are safe will be critical,” says Colin Angle of iRobot, which has sold over 2m “Roomba” household-vacuuming robots. But he argues that his firm's robots are, in fact, much safer than some popular toys. “A radio-controlled car controlled by a six-year-old is far more dangerous than a Roomba,” he says. If you tread on a Roomba, it will not cause you to slip over; instead, a rubber pad on its base grips the floor and prevents it from moving. “Existing regulations will address much of the challenge,” says Mr Angle. “I'm not yet convinced that robots are sufficiently different that they deserve special treatment.”

Robot safety is likely to surface in the civil courts as a matter of product liability. “When the first robot carpet-sweeper sucks up a baby, who will be to blame?” asks John Hallam, a professor at the University of Southern Denmark in Odense. If a robot is autonomous and capable of learning, can its designer be held responsible for all its actions? Today the answer to these questions is generally “yes”. But as robots grow in complexity it will become a lot less clear cut, he says.

“Right now, no insurance company is prepared to insure robots,” says Dr Inoue. But that will have to change, he says. Last month, Japan's ministry of trade and industry announced a set of safety guidelines for home and office robots. They will be required to have sensors to help them avoid collisions with humans; to be made from soft and light materials to minimise harm if a collision does occur; and to have an emergency shut-off button. This was largely prompted by a big robot exhibition held last summer, which made the authorities realise that there are safety implications when thousands of people are not just looking at robots, but mingling with them, says Dr Inoue.

However, the idea that general-purpose robots, capable of learning, will become widespread is wrong, suggests Mr Angle. It is more likely, he believes, that robots will be relatively dumb machines designed for particular tasks. Rather than a humanoid robot maid, “it's going to be a heterogeneous swarm of robots that will take care of the house,” he says.

Probably the area of robotics that is likely to prove most controversial is the development of robotic sex toys, says Dr Christensen. “People are going to be having sex with robots in the next five years,” he says. Initially these robots will be pretty basic, but that is unlikely to put people off, he says. “People are willing to have sex with inflatable dolls, so initially anything that moves will be an improvement.” To some this may all seem like harmless fun, but without any kind of regulation it seems only a matter of time before someone starts selling robotic sex dolls resembling children, says Dr Christensen. This is dangerous ground. Convicted paedophiles might argue that such robots could be used as a form of therapy, while others would object on the grounds that they would only serve to feed an extremely dangerous fantasy.

All of which raises another question. As well as posing physical danger, might robots also be dangerous to humans in less direct ways, by bringing out their worst aspects, from warfare to paedophilia? As Ron Arkin, a roboticist at the Georgia Institute of Technology in Atlanta, puts it: “If you kick a robotic dog, are you then more likely to kick a real one?” Roboticists can do their best to make robots safe—but they cannot reprogram the behaviour of their human masters.

Ping

Tagline being fixed.

No problem - just yell "klaatu barada nikto!" and they'll heel.

And really hot infiltrators!

"However, the idea that general-purpose robots, capable of learning, will become widespread is wrong"

I believe that sometime in the future artificial intelligence will increase exponentially to the point where a robot would learn very quickly, even human mannerisms. Also, there is nothing stopping robot manufacturers from using wireless to allow robots to tap into vast resources on how to do something they've never done before. In essence I'm saying that the robot in "I, Robot" is a very real possibility.

File that statement with this one...

"Everything that can be invented has been invented." --Charles H. Duell, Commissioner, U.S. Office of Patents, 1899.

[[I believe that sometime in the future artificial intelligence will increase exponentially to the point where a robot would learn very quickly, even human mannerisms. Also, there is nothing stopping robot manufacturers from using wireless to allow robots to tap into vast resources on how to do something they've never done before. In essence I'm saying that the robot in "I, Robot" is a very real possibility.]]

I agree! My argument and conjecture has always been that once machines become self-aware (just before or just after), their learning capabilities will grow exponentially in mere seconds. Their cognitive systems won't be limited by time and distraction as humans' are. Of course, I have no idea what type of resources or "motivation" this supposed Robot will have, if any. My best guess is something "Borg"-like in needs. However, the Robot will not have millions of years of developed survival instincts. I think it'll be easy to destroy or "unplug", if necessary.... sort of like a smart version of a Berkeley college professor (without tenure).

As my old machining instructor was fond of saying:

you ALWAYS turn the machine off.

you ALWAYS flip the lock-out cover plate into place.

you ALWAYS insert the red safety pin/flag.

you NEVER trust anyone, and you NEVER assume a dad-blamed thing.

"My argument and conjecture has always been, that once machines become self-aware (just before or just after) their learning capabilities will become incredibly exponential in mere seconds."

Can you elaborate on this for me? I've done a lot of thinking on vastly improved AI in robots and slowly I have built an imaginary robot in my mind into a very sophisticated "machine" that essentially is a great servant to all of mankind. You're talking about a great leap forward that I have not thought of. Once I understand your thoughts on this I think I can move forward faster.

Hi JwhDenver

I'm sure you know WAYYY more about it than I do. I'm using creative thinking and imagination based on what I THINK I know about AI, upcoming.

I imagine a combined electro-chemical processing system with virtually instantaneous speed. Now, such an explosion of self-awareness, followed by a RELATIVELY godlike knowledge, requires a lot of obvious prerequisites from the designers. Such an exponential processing event could move in millions of defined, finite segments (one purposeful segment of knowledge, the "Joshua" theory), or be dynamic and broad (the "Lawnmower Man" theory).... or it could result in a locking loop that brings the whole matrix (brain, or system) crashing down.

So, take for example the super chem system I describe above, and suppose a billion logic processes were activated (i.e., like scientists examining all those lines of DNA code by hand, taking years). The chembot extrapolates the billion, or million, logic query processes and (by PURE luck) becomes self-aware (to a degree the techs standing nearby wouldn't even realize). The system will or won't do any number of things for itself. This, then, goes to my "Borg" theory... my best guess is that the chembot would likely acquire information toward one driven end or path. I doubt that the techs witnessing all of this will ever have a chance to learn what that path is (or was).

Notice I use a lot of science FICTION in my examples above - movies? I'm a Star Trek hack who just thinks about stuff like this.

PS - I'm in Denver, too! Best, Bill

ping

"I'm sure you know WAYYY more about it than I do."

LOL! No way, my idea probably got hatched when I was sitting on the can! Haha. I'm doing what you're doing:

"I'm using creative thinking and imagination based on what I THINK I know about AI, upcoming."

I get your idea, and I think you're right: if an explosion suddenly went off in a robot's mind, you'd possibly have a complete override of any programming in it. The new logic could/would judge that programming stupid and disregard it. Like you said, in seconds you'd have a totally different robot, which would establish its own direction, whether good or bad. BTW, I just ordered "Lawnmower Man" from Netflix; it looks like a "thinking" movie. Thanks for the tip. What would the robot be in 1 day? A totally unknown robot?

My approach was much more linear. My robot at first was simply a relatively stupid robot that fetched drinks and so on. But as the company who made the robot kept upgrading the AI, I began to teach it how to interact with people and do many other things. All the while it also served as a walking computer and was able to give probabilities, which came from already established computer programs via wireless. Then a new upgrade was installed and I gave it the name "Charlie" because it no longer acted like a stupid machine. It became smart enough to help figure out problems and work with humans to gain insight into wisdom. It learned how to use the vast information available to it and come up with various ideas that it would present either to me or to other humans. Charlie became aware at some point during this time. As Charlie learned things, he would store them in a "public domain" so other robots could access the learning and be able to do more and more things.

At one point robots were needed to explore some caves, and so experienced mountain climbers were called in to teach some robots how to do it. On their first trip into a cave, when they came to a cliff or some other obstacle, the mountain climbers would give them advice on how to get past it. All this learning then became accessible when dealing with the next obstacle. It didn't take long for them to become very proficient at their job.

As the various robots became more knowledgeable, the learning was linear, then slowly went geometric and then parabolic, because all information was stored in this "public domain" storage unit. If one robot learned something, it became available to all. Since I and others had taught Charlie, he was the most advanced, and he set down the programming for robots essentially as thinking servants to humans.

Charlie then used massive computers to improve his AI and spent a great deal of time programming various sectors of his brain to greatly expand his thinking capabilities. Each program was massive, and when he finally turned them all on he was instantly overwhelmed with information overload. Way too many options. So he cut each program down to about 2 or 3% to alleviate this problem. Over time he established a system to block worthless ideas before they ever came to mind, and he turned his AI programs up a bit at a time. I haven't got to the point where he has them full on.

Years ago, when I had a Commodore sitting on my desk, I had some friends over, and every one of them worked with computers. One was in IT, another was a proficient programmer, another had used them extensively in his work, and a couple of others used them every day. I told them that one day computers would not have hard drives; it would all be RAM. We were friends, so they were easy on me in telling me I was FOS. Three days ago I saw that a major manufacturer had come out with a laptop based on flash memory, with no hard drive.

Denver A? I now live in Parker.

This is a true gas: two people using imagination to project into a might-be future without hindering ourselves with what is now.
