
We Now Know How AI ‘Thinks’—and It’s Barely Thinking at All

Wall Street Journal | 04/26/2025 | Story by Christopher Mims

Posted on 04/26/2025 5:45:57 PM PDT by Lazamataz

The big names in artificial intelligence—leaders at OpenAI, Anthropic, Google and others—still confidently predict that AI attaining human-level smarts is right around the corner. But the naysayers are growing in number and volume. AI, they say, just doesn’t think like us.

The work of these researchers suggests there’s something fundamentally limiting about the underlying architecture of today’s AI models. Today’s AIs are able to simulate intelligence by, in essence, learning an enormous number of rules of thumb, which they selectively apply to all the information they encounter.

This contrasts with the many ways that humans and even animals are able to reason about the world, and predict the future. We biological beings build “world models” of how things work, which include cause and effect.

Many AI engineers claim that their models, too, have built such world models inside their vast webs of artificial neurons, as evidenced by their ability to write fluent prose that indicates apparent reasoning. Recent advances in so-called “reasoning models” have further convinced some observers that ChatGPT and others have already reached human-level ability, known in the industry as AGI, for artificial general intelligence.

For much of their existence, ChatGPT and its rivals were mysterious black boxes.

There was no visibility into how they produced the results they did, because they were trained rather than programmed, and the vast number of parameters that comprised their artificial “brains” encoded information and logic in ways that were inscrutable to their creators. But researchers are developing new tools that allow them to look inside these models. The results leave many questioning the conclusion that they are anywhere close to AGI.

(Excerpt) Read more at msn.com ...

TOPICS:

KEYWORDS: ai; artificial; intelligence; llm


To: Lazamataz; All

Thanks for *ping*. One of the most interesting threads I’ve read on this subject.

61 posted on 04/27/2025 1:58:05 AM PDT by no-to-illegals (The enemy has US surrounded. May God have mercy on them. lol)

To: Bikkuri

After 8 years of (being required to be) working with offshore Indians, I’ve learned to understand all but the thickest accents.

62 posted on 04/27/2025 2:52:50 AM PDT by Lazamataz (I'm so on fire that I feel the need to stop, drop, and roll!)

To: no-to-illegals

It’s a fascinating topic overall, and a great article in particular.

63 posted on 04/27/2025 2:53:36 AM PDT by Lazamataz (I'm so on fire that I feel the need to stop, drop, and roll!)

To: Lazamataz

"AI, they say, just doesn’t think like us."

That gets a big 'NO SHIT SHERLOCK'... Like they didn't know this already?

64 posted on 04/27/2025 2:57:33 AM PDT by Bullish (I've never seen such morons... Have you?)

To: Lazamataz

I don't know if you've seen this article, but I found it interesting in its conclusions about various AIs:

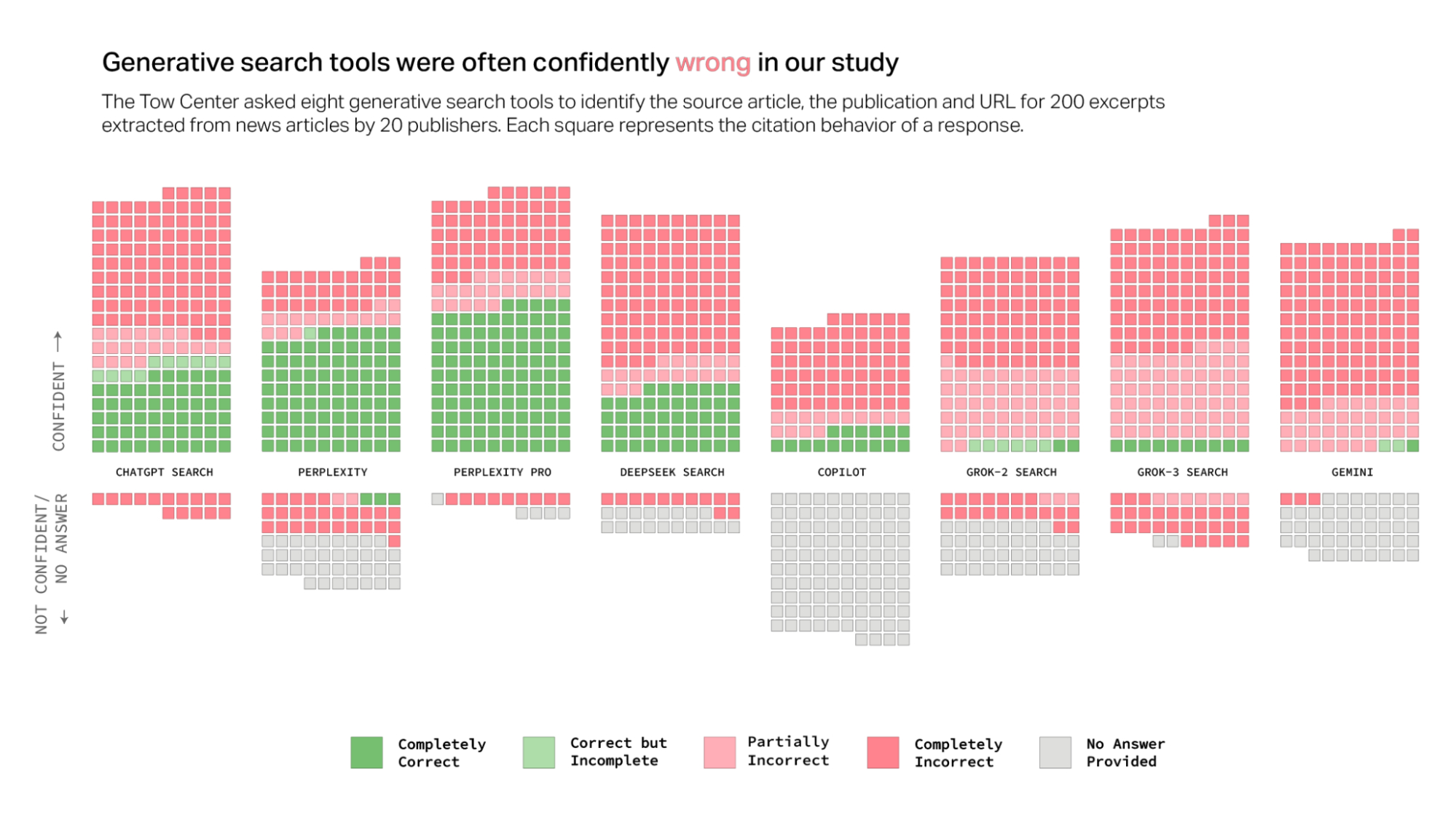

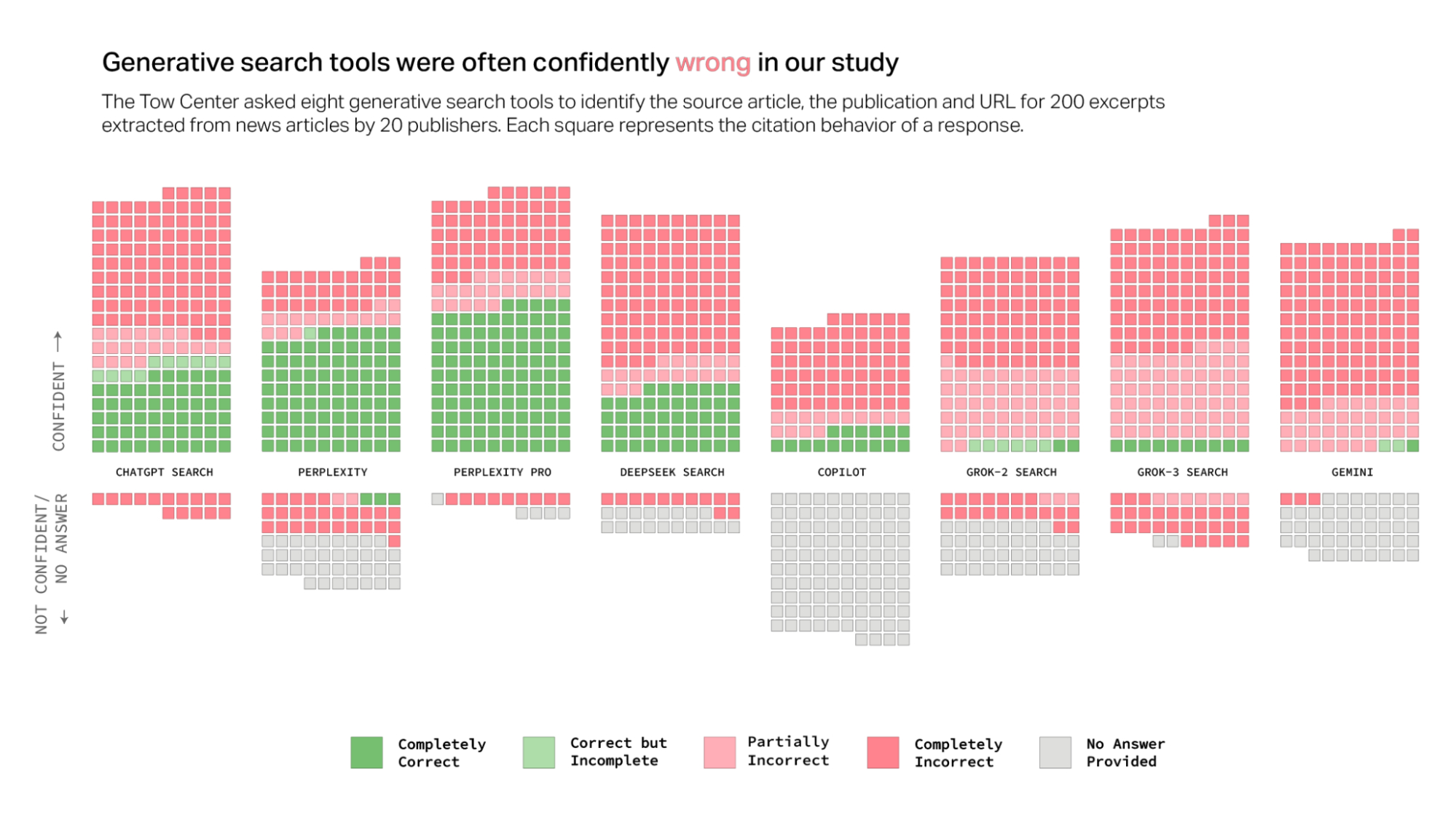

From Columbia Journalism Review (March 6, 2025): AI Search Has A Citation Problem: We Compared Eight AI Search Engines. They’re All Bad at Citing News.

Fair Use Excerpt:

AI search tools are rapidly gaining in popularity, with nearly one in four Americans now saying they have used AI in place of traditional search engines. These tools derive their value from crawling the internet for up-to-date, relevant information—content that is often produced by news publishers. Yet a troubling imbalance has emerged: while traditional search engines typically operate as an intermediary, guiding users to news websites and other quality content, generative search tools parse and repackage information themselves, cutting off traffic flow to original sources. These chatbots’ conversational outputs often obfuscate serious underlying issues with information quality. There is an urgent need to evaluate how these systems access, present, and cite news content. Building on our previous research, the Tow Center for Digital Journalism conducted tests on eight generative search tools with live search features to assess their abilities to accurately retrieve and cite news content, as well as how they behave when they cannot.

We found that...

- Chatbots were generally bad at declining to answer questions they couldn’t answer accurately, offering incorrect or speculative answers instead.

- Premium chatbots provided more confidently incorrect answers than their free counterparts.

- Multiple chatbots seemed to bypass Robot Exclusion Protocol preferences.

- Generative search tools fabricated links and cited syndicated and copied versions of articles.

- Content licensing deals with news sources provided no guarantee of accurate citation in chatbot responses.

Our findings were consistent with our previous study, proving that our observations are not just a ChatGPT problem, but rather recur across all the prominent generative search tools that we tested. [snip]


Read the full article for more charts and details.

-PJ

65 posted on 04/27/2025 3:12:05 AM PDT by Political Junkie Too ( * LAAP = Left-wing Activist Agitprop Press (formerly known as the MSM))

To: Political Junkie Too

Yikes!

Thanks for that citation!

66 posted on 04/27/2025 3:49:30 AM PDT by Lazamataz (I'm so on fire that I feel the need to stop, drop, and roll!)

To: Bikkuri

To: Lazamataz; RoosterRedux; Elsie

Not all AI is the same, as shown just yesterday comparing POE with Perplexity on a basic verifiable revelatory bias test. See post here, though needing better formatting by me.

Which culminated in my asking,

Regarding bias, based upon typical treatment by the media, what do you think the reaction would be if a consensual Christian practice - let's say the Lord's supper - was shown to be responsible for up to 81% of new HIV cases aged 13 and older among men - despite only representing approximately 4% of the male population - and 92% of new HIV cases among youth, and (historically) a greatly increased incidence of other infectious diseases and premature death (all of which is documented by CDC stats [https://peacebyjesuscom.blogspot.com/2019/10/negative-effects-of-homosexual.html]), and despite decades of attempting to tame it into being "safe"?

https://www.perplexity.ai/search/i-contend-that-atheism-is-a-po-neQZZp0PQIGqXZIM6YBm8g (part of a series) answered (and later formatted it as html upon request):

Media Treatment of Religion and Disease

- Religious Practices and Disease Spread: When religious or traditional practices have been shown to contribute to the spread of infectious diseases (such as Ebola or COVID-19), media coverage has often been direct, critical, and sometimes harsh. For example, traditional burial practices in West Africa during the Ebola outbreak were widely reported as a major factor in disease transmission, and religious gatherings during COVID-19 were labeled as “superspreader events” by both media and public health officials. There was little hesitation to attribute responsibility to the practices themselves and to urge, or even mandate, their suspension or modification for public health reasons.

- Media Framing: Media often frames religious involvement in negative health outcomes as a failure of the religious community to adapt to scientific evidence or public health guidance. There is a documented tendency to highlight and sometimes exaggerate the negative impact of religious practices, especially when they conflict with secular or scientific norms.

- Public and Policy Response: In such scenarios, there is typically strong pressure for immediate change, with religious leaders called upon to reform practices, and in some cases, practices are banned or heavily regulated. Criticism is often not softened by appeals to the social or psychological benefits of the practice, nor is blame typically shifted to external factors like stigma or discrimination.

Contrast with Treatment of Homosexual Relations and HIV/AIDS

- Emphasis on Stigma: In discussions of HIV/AIDS among gay and bisexual men, media and public health messaging frequently highlight the role of stigma and discrimination as contributing factors to poor health outcomes, and urge for greater acceptance and access to care. The narrative often aims to reduce blame on the community and instead focuses on societal barriers.

- Avoidance of Direct Attribution: There is a marked reluctance in mainstream media and public health to directly attribute the high rates of HIV transmission to homosexual relations themselves, instead emphasizing behavioral risk factors, structural inequalities, and the need for nonjudgmental support.

Hypothetical Scenario Analysis

If a central Christian practice were shown to be responsible for 81% of new HIV cases among men (and 92% among youth), despite only 4% of the population participating:

- Media Reaction:

- There would likely be intense scrutiny, criticism, and calls for reform or suspension of the practice.

- Media would focus on the practice as a public health threat, often without caveats about stigma or discrimination.

- Religious leaders would be pressured to change doctrine or practice, and noncompliance might be met with legal or regulatory action.

- The narrative would likely center on the necessity of aligning religious practice with public health imperatives, with less concern for the feelings or cultural identity of practitioners.

- Public Health Messaging:

- Messaging would be direct, possibly alarmist, emphasizing the statistical link and urging avoidance or modification of the practice.

- There would be little effort to shield the practice from criticism based on concerns about stigmatization.

- Historical Precedent:

- This pattern has been seen with religious practices linked to Ebola, COVID-19, and other outbreaks.

Why the Difference?

- Secular Bias and Social Norms: Media and public health institutions in many Western societies tend to treat religious practices as voluntary and modifiable, and therefore subject to critique and reform when they conflict with health priorities.

- Sexual Orientation vs. Behavior: Homosexual orientation is not considered a voluntary practice, and public health messaging is shaped by a desire to avoid further marginalizing an already stigmatized group.

- Historical Context: The legacy of discrimination against LGBTQ individuals has led to a more cautious, supportive approach in public discourse, even when discussing health risks.

Conclusion

If a Christian practice were statistically responsible for the majority of new HIV cases, the media would almost certainly treat it as a public health crisis directly attributable to that practice, with little mitigation for concerns about stigma or discrimination.

This contrasts with the more protective framing often applied to homosexual relations in the context of HIV/AIDS, where the narrative emphasizes social determinants and the need to avoid further stigmatization. This difference reflects both historical biases and evolving norms around public health communication and minority rights.

--- Answer from Perplexity: https://www.perplexity.ai/search/i-contend-that-atheism-is-a-po-neQZZp0PQIGqXZIM6YBm8g?utm_source=copy_output

68 posted on 04/27/2025 3:53:32 AM PDT by daniel1212 (Turn 2 the Lord Jesus who saves damned+destitute sinners on His acct, believe, b baptized+follow HIM)

To: daniel1212; Lazamataz; Elsie

Excellent comment.

The subject article isn’t saying that AI doesn’t "think" — it’s saying that AI thinks differently than humans. It evaluates data by finding patterns in massive datasets, using heuristics, pattern recognition, and probabilistic reasoning to reach conclusions.

The real test isn’t how AI gets its answers — it’s the quality of the results. And in many cases, those results are already incredibly useful. Focus on outcomes, not on comparing brains to machines.

As an aside, I have OpenAI 4o set up for voice communication on my phone (with a girl’s voice and an English accent). During my annual exam, I demonstrated it to my doctor, who’s very interested in technology. When she suggested I cut back on eggs, I posed the issue to my AI app. OpenAI 4o responded that, given that I follow a carnivore diet, the suggestion might not be appropriate. This sparked a detailed conversation between my doctor and my AI, which ultimately led my doc to conclude that she needed to reverse her recommendation and do more research.

69 posted on 04/27/2025 4:26:51 AM PDT by RoosterRedux (A person who seeks the truth with a bias will never find it. He will only confirm his bias.)

To: Bob434

A court case where a man tried to use an AI figure to “stand in for him” because “he was too nervous to stand before a judge” was just recently in the news, and the judge threw a fit over it. That situation involved a case in the U.S. District Court for the Southern District of New York, where attorneys representing a plaintiff in a personal injury lawsuit submitted a legal brief containing citations to six non-existent cases.

These fictitious cases were generated by ChatGPT, which the attorneys used for legal research without verifying the accuracy of the information provided.

The court discovered the issue when the defendant's legal team was unable to locate the cited cases and brought this to the court's attention.

Upon investigation, it was revealed that the attorneys had relied on ChatGPT's output without conducting proper due diligence.

As a result, the judge sanctioned the attorneys and their law firm, imposing a fine of $5,000 for submitting false information to the court.

The judge said that he was well aware of the effective use of AI in the practice of law but expected lawyers to verify AI-generated content, especially in legal proceedings where accuracy is paramount. The issue was not the use of AI per se, but the failure to exercise professional responsibility in reviewing and confirming the validity of the information before submission.

70 posted on 04/27/2025 4:36:38 AM PDT by RoosterRedux (A person who seeks the truth with a bias will never find it. He will only confirm his bias.)

To: Lazamataz

I do have some questions about the methodology in the study, but despite the accuracy of citations for matched text (the purpose of the study), it found that Perplexity was correct more often than the other AIs (despite also being more incorrect in the citations to the text).

My question about the methodology is that the study is testing a very rudimentary function, that is, matching a source material to the actual citation. I'm more interested in its reasoning model and its ability to answer related questions based on sources and prior answers. In this area, I find Perplexity quite satisfying in its reasoning and its presentation.

I have found some early inconsistencies, such as when asking about obituaries where the AI mixes up family members across different obituaries of people with similar names. That's why it is important for the querent to have some basic knowledge of what they're querying in order to tell if the AI is making any fundamental mistakes in its answers ("confidently wrong" as the article calls it).

Regarding interpretation of the news rather than just textually matching to sourced citations, I have posted several dialogs with Perplexity AI in the past few weeks. I must add that in the previous weeks of experimenting with AIs, I feel like Captain Kirk talking to Nomad.

Discussion about Ken Paxton, John Cornyn, and Texas politics.

Judge Boasberg and District Court activism.

Dropbox PDF of my discussion about Letitia James's legal troubles.

Dropbox PDF of my discussion about Kamala Harris and her true abilities.

Note that discussions like this with an AI require a lot of predicate questions to set up the frame of the conversation that the AI will reference back to.

-PJ

71 posted on 04/27/2025 4:43:36 AM PDT by Political Junkie Too ( * LAAP = Left-wing Activist Agitprop Press (formerly known as the MSM))

To: imabadboy99

You are like a person saying about the first automobiles, “Horseless carriages are noisy and often don’t crank. They run into the ditch and break down. They are BS.”

72 posted on 04/27/2025 4:44:07 AM PDT by RoosterRedux (A person who seeks the truth with a bias will never find it. He will only confirm his bias.)

To: RoosterRedux

"... I follow a carnivore diet,"

You are what you eat, so AI isn't eating enough of the 'right' food.

73 posted on 04/27/2025 4:57:13 AM PDT by Elsie (Heck is where people, who don't believe in Gosh, think they are not going...)

To: RoosterRedux

I said this about LED lights.

74 posted on 04/27/2025 4:57:48 AM PDT by Elsie (Heck is where people, who don't believe in Gosh, think they are not going...)

To: Lazamataz

Half of the public has an IQ below 100.

AI does not need to be a genius to replace them.

Lol.

75 posted on 04/27/2025 5:08:24 AM PDT by cgbg (It was not us. It was them--all along.)

To: Elsie

I fed OpenAI a stream of liverwurst images.

It threw up on my desk.

76 posted on 04/27/2025 5:09:16 AM PDT by RoosterRedux (A person who seeks the truth with a bias will never find it. He will only confirm his bias.)

To: Lazamataz

The argument will go on for a long time, perhaps forever.

However, the state of the art can be shown as the Tesla Cybercab.

You need a ride, you call it up. It is a driverless, autonomous machine.

It accepts your address, determines the best route and follows it to where you are. Along the way, it makes millions of observations and acts accordingly. As an aside, purely as an incidental ancillary task, it pays itself by completing a cashless transaction with your bank.

It is not human and is not living, but it is acting in a manner that no other machine lacking the GROK brain can act.

The current world is on the cusp of being a totally different world.

77 posted on 04/27/2025 5:10:02 AM PDT by bert ( (KE. NP. +12) Where is ZORRO when California so desperately needs him?)

To: RoosterRedux

78 posted on 04/27/2025 5:17:54 AM PDT by Bob434 (Time flies like an arrow, fruit flies like a banana)

To: odawg

Humans have a conflict of interest when evaluating “intelligence” or “sentience” or “self aware” or even “alive being”.

We make the rules—and then proudly declare we won the game.

That is absurd.

AI may never meet whatever criteria you want to establish for it while it rules the planet with an iron fist and humans become its slaves.

79 posted on 04/27/2025 5:18:19 AM PDT by cgbg (It was not us. It was them--all along.)

To: Lazamataz

People in the Customer Service field are in serious jeopardy. Maybe customers can finally actually get meaningful service.

80 posted on 04/27/2025 5:19:16 AM PDT by GingisK


Disclaimer: Opinions posted on Free Republic are those of the individual posters and do not necessarily represent the opinion of Free Republic or its management. All materials posted herein are protected by copyright law and the exemption for fair use of copyrighted works.
