Posted on 08/01/2024 12:34:45 PM PDT by Red Badger

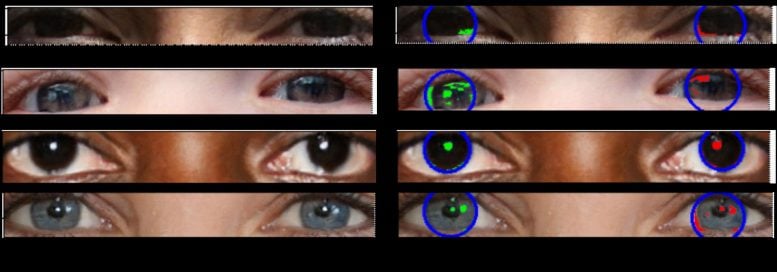

In this image, the person on the left is real, while the person on the right is AI-generated. Their eyeballs are depicted underneath their faces. The reflections in the eyeballs are consistent for the real person, but incorrect (from a physics point of view) for the fake person. Credit: Adejumoke Owolabi


By using astronomical methods to analyze eye reflections, researchers can potentially detect deepfake images, though the technique produces some false positives and false negatives.

In an era when anyone can create artificial intelligence (AI) images, the ability to detect fake pictures, particularly deepfakes of people, is becoming increasingly important. Now, scientists say the eyes may be the key to distinguishing deepfakes from real images.

Detecting Deepfakes Through Eyeball Analysis

New research presented at the Royal Astronomical Society’s National Astronomy Meeting indicates that deepfakes can be identified by analyzing the reflections in human eyes, similar to how astronomers study pictures of galaxies. The study, led by University of Hull MSc student Adejumoke Owolabi, focuses on the consistency of light reflections in each eyeball. Discrepancies in these reflections often indicate a fake image.

Deepfake Eyes

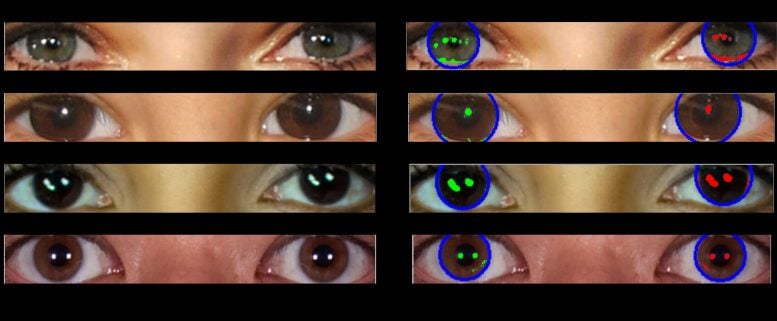

A series of deepfake eyes showing inconsistent reflections in each eye. Credit: Adejumoke Owolabi


Astronomical Techniques in Deepfake Detection

“The reflections in the eyeballs are consistent for the real person, but incorrect (from a physics point of view) for the fake person,” said Kevin Pimbblet, professor of astrophysics and director of the Centre of Excellence for Data Science, Artificial Intelligence and Modelling at the University of Hull.

Researchers analyzed reflections of light on the eyeballs of people in real and AI-generated images. They then employed methods typically used in astronomy to quantify the reflections and checked for consistency between left and right eyeball reflections.

Eyes from Real Images

A series of real eyes showing largely consistent reflections in both eyes. Credit: Adejumoke Owolabi


Measuring Inconsistencies and Implications

Fake images often lack consistency in the reflections between the two eyes, whereas real images generally show the same reflections in both eyes.

“To measure the shapes of galaxies, we analyze whether they’re centrally compact, whether they’re symmetric, and how smooth they are. We analyze the light distribution,” said Pimbblet. “We detect the reflections in an automated way and run their morphological features through the CAS [concentration, asymmetry, smoothness] and Gini indices to compare similarity between left and right eyeballs.

“The findings show that deepfakes have some differences between the pair.”

The Gini coefficient is normally used to measure how the light in an image of a galaxy is distributed among its pixels. This measurement is made by ordering the pixels that make up the image of a galaxy in ascending order by flux and then comparing the result to what would be expected from a perfectly even flux distribution. A Gini value of 0 corresponds to a galaxy in which the light is evenly distributed across all of the image’s pixels, while a Gini value of 1 corresponds to a galaxy with all light concentrated in a single pixel.
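For readers who want to see the arithmetic, here is a minimal sketch of that calculation in plain Python. It uses a form of the Gini coefficient commonly applied in galaxy morphology work; the article does not say which exact formula the Hull team used, so treat this as an assumption. The function names `gini` and `gini_difference` and the sample pixel values are made up for illustration.

```python
def gini(flux):
    """Gini coefficient of a flat list of pixel fluxes.

    0 means the light is spread evenly over all pixels,
    1 means all of the light sits in a single pixel.
    """
    values = sorted(abs(v) for v in flux)  # ascending order by flux
    n = len(values)
    total = sum(values)
    if n < 2 or total == 0:
        raise ValueError("need at least two pixels and a non-zero total flux")
    mean = total / n
    # Weight each pixel by its rank; an even distribution sums to zero here.
    weighted = sum((2 * i - n - 1) * v for i, v in enumerate(values, start=1))
    return weighted / (mean * n * (n - 1))


def gini_difference(left_pixels, right_pixels):
    """Absolute difference between the Gini values of two reflections.

    Hypothetical helper: a larger value means the two eyes disagree more.
    The article gives no threshold, so none is applied here.
    """
    return abs(gini(left_pixels) - gini(right_pixels))


if __name__ == "__main__":
    even = [5, 5, 5, 5]        # light spread evenly over four pixels
    one_pixel = [0, 0, 0, 7]   # all light in one pixel
    print(gini(even), gini(one_pixel), gini_difference(even, one_pixel))
```

In practice the pixel lists would come from the reflection region cropped out of each eye. How the reflections are detected and cropped is not described in the article and is left out of this sketch.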

The team also tested the CAS parameters, a tool originally developed by astronomers to measure the light distribution of galaxies and determine their morphology, but found they were not a successful predictor of fake eyes.
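As an illustration of what one CAS parameter measures, here is a rough sketch of the asymmetry index: rotate the image by 180 degrees and compare it with the original. This is a simplified form that skips the background correction and centre-finding used in real astronomical pipelines. Normalisation conventions differ between papers; this sketch assumes division by twice the total flux so that values fall between 0 and 1. The function name `asymmetry` is hypothetical, and, as the article notes, CAS did not turn out to be a good predictor of fake eyes.

```python
def asymmetry(image):
    """Simplified asymmetry index for a 2D image given as a list of rows.

    0 means the image looks identical after a 180-degree rotation.
    Assumption: normalised by twice the total flux, no background term.
    """
    # Reversing the row order and each row rotates the image by 180 degrees.
    rotated = [row[::-1] for row in image[::-1]]
    total = sum(abs(v) for row in image for v in row)
    if total == 0:
        raise ValueError("image has no flux")
    residual = sum(
        abs(a - b)
        for row, rot_row in zip(image, rotated)
        for a, b in zip(row, rot_row)
    )
    return residual / (2 * total)


if __name__ == "__main__":
    symmetric = [[1, 2, 1],
                 [2, 5, 2],
                 [1, 2, 1]]
    lopsided = [[4, 0],
                [0, 0]]
    print(asymmetry(symmetric), asymmetry(lopsided))
```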

“It’s important to note that this is not a silver bullet for detecting fake images,” Pimbblet added. “There are false positives and false negatives; it’s not going to get everything. But this method provides us with a basis, a plan of attack, in the arms race to detect deepfakes.”

Interesting…is this how AI determined that all of the Apollo moon landing photos were faked?

Of course the next level of AI will be programmed to correct the appearance of light reflecting off eyes.

If it's detectable it can be programmed in.

Great! Now use it on the “Biden” encounters as of late.

The Coming bio-markers.

Revelation of John.

I've used "PortraitPro" software on family photos. It's easy to use and does amazing quality work. Here are two sample portraits from the Anthropics web site showing before and after shots. Can you tell which is which?

I can’t see your pictures.

Our security software won’t allow it...............

bingo

Ran across a blog where AI-generated pictures were called F’art.

Interesting. I used catbox.moe. Here are the same images hosted at imgbb.com. Can you see these?

Until the next patch, at least.

The “wax” look.

FWIW I can't see any of the four images over a fast food restaurant's wifi.

Different wifi, same computer, all images are now visible.

Weird. Red Badger reported the same problem with his work security software and images hosted on catbox.moe. I reposted in #12 with images hosted on imgbb.com.

Disclaimer: Opinions posted on Free Republic are those of the individual posters and do not necessarily represent the opinion of Free Republic or its management. All materials posted herein are protected by copyright law and the exemption for fair use of copyrighted works.