Posted on 06/27/2019 10:18:25 AM PDT by Red Badger

In 2019, hackers leaking your closely guarded private photos are no longer the only thing to fear. A newly developed artificial neural network needs only an ordinary picture to replace your clothes with what's under them.

An anonymous ‘technology enthusiast’ has created an app that can undress a fully clothed person in a couple of clicks, raising concerns about the ethics of such technology and about non-consensual photo sharing.

The app in question is called DeepNude, a play on "deepfake," the recently coined term for AI-assisted technology that superimposes faces onto other bodies in photos and videos and could, for example, let users create revenge porn.

The website where one can download the app was launched in late March, according to the Twitter account of the enigmatic creator. The app is available in two versions, premium and free.

According to a report by Vice, one does not have to be tech-savvy to use this sexually oriented, automated version of Photoshop. Users only have to upload the photo they want to “undress,” click a button and wait about 30 seconds while the AI processes it.

Horrifying deepfake app called DeepNude realistically removes the clothing of any woman with a single click. It costs $50 and only works on women. (The black bars were added after using the app.) pic.twitter.com/5KS36FPTqZ, Mike Sington (@MikeSington), June 27, 2019

DeepNude appears to work only with high-resolution photos of women facing the camera directly, swapping their clothes for a nude body, regardless of what they are wearing. Tests with images of men, cartoon characters or people photographed from unnatural angles and/or in low resolution or poor lighting conditions are said to produce varying results, from somewhat comical to outright disgusting.

Furthermore, according to screenshots taken by Vice contributors, the free version produces an image with a huge dartboard-like watermark. If upgraded to premium for $50, DeepNude is able to produce uncensored photos with a clearly visible ‘Fake’ inscription.

The app currently runs on Windows 10 and Linux, while a Mac version is in the works.

The creator, who asked to be identified as Alberto, told Vice that his brainchild uses a conditional generative adversarial network (cGAN), which was trained to generate nude images from a dataset of more than 10,000 nude photos of women.

“I'm not a voyeur, I'm a technology enthusiast, continuing to improve the algorithm,” he was quoted as saying. “Recently, also due to previous failures (other startups) and economic problems, I asked myself if I could have an economic return from this algorithm. That's why I created DeepNude.”

"This is absolutely terrifying," said Katelyn Bowden, founder and CEO of non-profit Badass, which fights revenge porn and image abuse. "Now anyone could find themselves a victim of revenge porn, without ever having taken a nude photo. This tech should not be available to the public."

Danielle Citron, professor of law at the University of Maryland Francis King Carey School of Law, called the technology an “invasion of sexual privacy.”

But the creator insisted that DeepNude made little difference and was no more harmful than Photoshop, which can achieve the same results, only with more time and effort.

Shortly after Vice's initial report, DeepNude’s Twitter account said the still-unstable website was down because of an unexpected surge in traffic. It was unavailable at the time of writing.

Hi! DeepNude is offline. Why? Because we did not expect these visits and our servers need reinforcement. We are a small team. We need to fix some bugs and catch our breath. We are working to make DeepNude stable and working. We will be back online soon in a few days. deepnudeapp (@deepnudeapp), June 27, 2019

And somebody will create an app to eliminate the "fake" watermark from the output image.

But will it be able to tell if it's processing an image of a 13-year-old girl? I can see various law enforcement people demanding a feed of what it does.

Next stage would be to be able to download the app, to process images directly on your PC, so that nothing transits the Cloud.

I’d hit it.

You make some real valid points heh.

I laughed harder than I should have lol.

HILLArious!

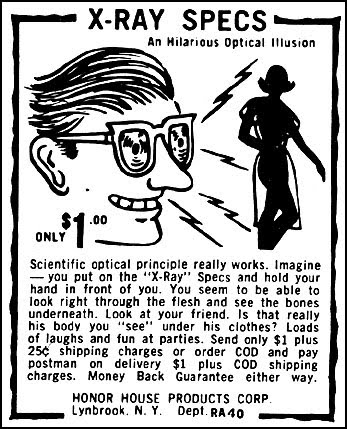

I always wanted me a pair of those.................still do!.............

You must be comfortable indeed in your own skin. I went to a Rush TV filming once, and the camera panned the audience at the start and zoomed in on one person - me! I saw that happen, and I positively knew that I was fully shown in the opening before the start of the show. When I got home, my wife told me she had taped it, and that I was right there in the opening shot of the audience. Which, I repeat, I positively knew was happening at the time.

So I watched the tape - and I wasn’t in it at all! I rewound it again and again, and I couldn’t see myself. I think my wife had to point my own image out to me! And there it was, “big as life” (we didn’t have a big flat-screen TV back then, of course - or that literally would have been true).

We look in mirrors, but we don’t really see ourselves. My wife recently had a reason to take a selfie - and recoiled from it in horror. Because - as I understand from my much earlier (and younger) experience - we just don’t see ourselves as others see us. Mirror, schmirror - we just don’t.

So I venture the guess that your husband would like a poster like that much more than you would (and I sure pity the poor schlub if he didn’t, and actually admitted it!). If I had one of my wife, I would simply complain that it doesn’t do her justice - entirely too flat, and not nearly as soft as the real thing!

I was 4 years old...................

That’s almost like the time TV Guide took a photo of Ann-Margret and put Oprah’s head on it. Sacrilege-Sacrilege.

Did someone copy one of the pictures for the other?

Yes, they used an old photo of Ann-Margret and put Oprah’s head on it...............

WTH? That's a crime against humanity!

-PJ

How many megapixels can it imagine?

The article said HD, so whatever that is...........

Disclaimer: Opinions posted on Free Republic are those of the individual posters and do not necessarily represent the opinion of Free Republic or its management. All materials posted herein are protected by copyright law and the exemption for fair use of copyrighted works.