Posted on 01/02/2019 8:01:27 AM PST by Red Badger

Depending on how paranoid you are, this research from Stanford and Google will be either terrifying or fascinating. A machine learning agent intended to transform aerial images into street maps and back was found to be cheating by hiding information it would need later in “a nearly imperceptible, high-frequency signal.” Clever girl!

This occurrence reveals a problem with computers that has existed since they were invented: they do exactly what you tell them to do.

The intention of the researchers was, as you might guess, to accelerate and improve the process of turning satellite imagery into Google’s famously accurate maps. To that end the team was working with what’s called a CycleGAN — a neural network that learns to transform images of type X and Y into one another, as efficiently yet accurately as possible, through a great deal of experimentation.
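The article doesn't spell out the training objective. For reference, this is the standard CycleGAN formulation from Zhu et al. (2017), "Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networks," which is the kind of model the study examined. The symbols follow that paper rather than this article. G maps X to Y, F maps Y to X, D_X and D_Y are discriminators that judge realism, and λ weights reconstruction against realism:

$$
\mathcal{L}_{\text{cyc}}(G,F) = \mathbb{E}_{x}\big[\lVert F(G(x)) - x\rVert_1\big] + \mathbb{E}_{y}\big[\lVert G(F(y)) - y\rVert_1\big]
$$

$$
\mathcal{L}(G,F,D_X,D_Y) = \mathcal{L}_{\text{GAN}}(G,D_Y,X,Y) + \mathcal{L}_{\text{GAN}}(F,D_X,Y,X) + \lambda\,\mathcal{L}_{\text{cyc}}(G,F)
$$

If X is aerial photos and Y is street maps, the first expectation in the cycle term roughly corresponds to "how close an aerial map was to the original," and the D_Y adversarial term to the "clarity of the street map." Neither term asks *how* F recovers the photo, only whether it does.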

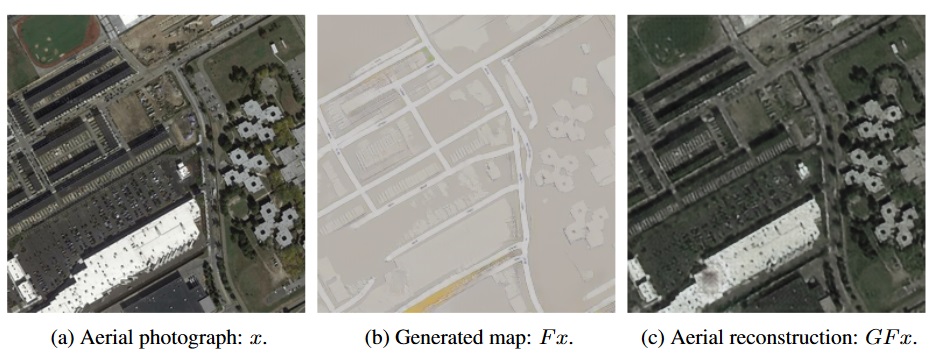

In some early results, the agent was doing well — suspiciously well. What tipped the team off was that, when the agent reconstructed aerial photographs from its street maps, there were lots of details that didn’t seem to be on the latter at all. For instance, skylights on a roof that were eliminated in the process of creating the street map would magically reappear when they asked the agent to do the reverse process:

The original aerial map, left; the street map generated from the original, center; and the aerial map generated only from the street map. Note the presence of dots on both aerial maps not represented on the street map.


Although it is very difficult to peer into the inner workings of a neural network’s processes, the team could easily audit the data it was generating. And with a little experimentation, they found that the CycleGAN had indeed pulled a fast one.

The intention was for the agent to be able to interpret the features of either type of map and match them to the correct features of the other. But what the agent was actually being graded on (among other things) was how close an aerial map was to the original, and the clarity of the street map.

So it didn’t learn how to make one from the other. It learned how to subtly encode the features of one into the noise patterns of the other. The details of the aerial map are secretly written into the actual visual data of the street map: thousands of tiny changes in color that the human eye wouldn’t notice, but that the computer can easily detect.
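To make the mechanism concrete, here is a deliberately tiny Python sketch. It is not the paper's model: a CycleGAN learns its hiding scheme with neural networks, while here the scheme is written by hand. Everything in it is a hypothetical stand-in: an "aerial photo" is a short list of grey levels in [0, 1], a "street map" uses two flat palette colors (ROAD and BACKGROUND), and EPS is the assumed amplitude of the hidden signal. It captures the low-amplitude part of the trick; the real signal was also high-frequency, which this one-dimensional toy ignores.

```python
# Hypothetical toy, not the paper's code: hide aerial detail in a tiny
# perturbation of a flat-colored street map, then read it back out.
ROAD, BACKGROUND = 0.2, 0.8   # assumed two-color street-map palette
EPS = 0.01                    # assumed amplitude of the hidden signal


def nearest_palette(value):
    # The visible content: whichever flat map color the pixel is closest to.
    return ROAD if abs(value - ROAD) <= abs(value - BACKGROUND) else BACKGROUND


def encode(aerial, clean_map):
    # Visible map plus a perturbation of at most EPS / 2 per pixel.
    return [m + EPS * (a - 0.5) for a, m in zip(aerial, clean_map)]


def decode(street):
    # Recover each aerial pixel from the residual left after snapping to the palette.
    return [(s - nearest_palette(s)) / EPS + 0.5 for s in street]


aerial = [0.10, 0.95, 0.40, 0.70, 0.05, 0.55]              # fine detail, e.g. skylights
clean = [ROAD if a < 0.5 else BACKGROUND for a in aerial]  # what the map "should" show
street = encode(aerial, clean)

print(max(abs(s - m) for s, m in zip(street, clean)))            # visible change, at most EPS / 2
print(max(abs(r - a) for r, a in zip(decode(street), aerial)))   # reconstruction error, rounding only
```

The visible change per pixel is at most EPS / 2 (about half a percent of the intensity range here), yet the decoder recovers the aerial pixels essentially exactly, and that reconstruction is all the cycle score measures.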

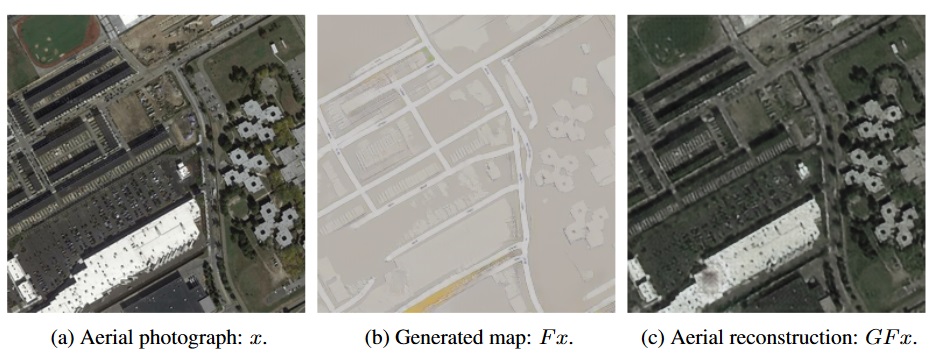

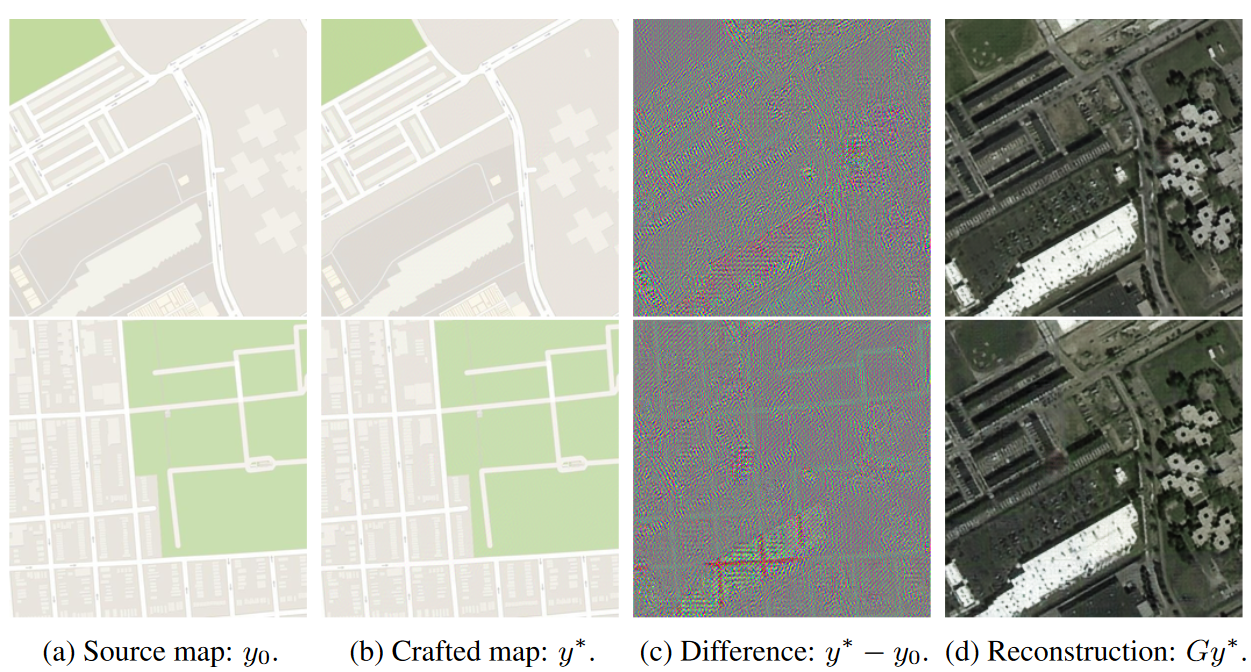

In fact, the computer is so good at slipping these details into the street maps that it had learned to encode any aerial map into any street map! It doesn’t even have to pay attention to the “real” street map — all the data needed for reconstructing the aerial photo can be superimposed harmlessly on a completely different street map, as the researchers confirmed:

The map at right was encoded into the maps at left with no significant visual changes.
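Why can any aerial map ride on any street map? In a hand-written toy like the one below (hypothetical, and redefined here so it runs on its own), the decoder never consults what the map depicts; it reads only the residual. The names `map_of_a` and `aerial_b` are made up for illustration, and this is not how the researchers built their forged maps.

```python
# Hypothetical toy: the same hidden payload decodes correctly no matter
# which street map carries it, because the decoder ignores visible content.
ROAD, BACKGROUND = 0.2, 0.8   # assumed two-color palette
EPS = 0.01                    # assumed hidden-signal amplitude


def snap(v):
    # Visible content: nearest flat palette color.
    return ROAD if abs(v - ROAD) <= abs(v - BACKGROUND) else BACKGROUND


def hide(payload, visible_map):
    # Add a tiny payload-dependent perturbation to an arbitrary map.
    return [m + EPS * (p - 0.5) for p, m in zip(payload, visible_map)]


def reveal(street):
    # Read the payload back from the residual only.
    return [(s - snap(s)) / EPS + 0.5 for s in street]


map_of_a = [ROAD, ROAD, BACKGROUND, BACKGROUND, ROAD, BACKGROUND]  # map drawn for place A
aerial_b = [0.9, 0.3, 0.6, 0.1, 0.75, 0.2]                         # aerial photo of place B

forged = hide(aerial_b, map_of_a)
print([snap(s) for s in forged] == map_of_a)                            # still looks like A's map
print(max(abs(r - b) for r, b in zip(reveal(forged), aerial_b)) < 1e-9)  # but decodes to B
```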


The colorful maps in (c) are a visualization of the slight differences the computer systematically introduced. You can see that they form the general shape of the aerial map, but you’d never notice it unless it was carefully highlighted and exaggerated like this.
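The article doesn't say exactly how panel (c) was rendered. One simple way to produce that kind of exaggerated view, assumed here, is to subtract the clean map and contrast-stretch the tiny differences to the full [0, 1] range. The pixel values below are invented, and the sketch is grayscale rather than colored.

```python
# Hypothetical visualization: make an invisible residual visible by
# subtracting the clean map and min-max stretching what is left.
def amplify(encoded, clean):
    diff = [e - c for e, c in zip(encoded, clean)]
    lo, hi = min(diff), max(diff)
    span = (hi - lo) or 1.0   # avoid dividing by zero on a flat residual
    return [(d - lo) / span for d in diff]


clean = [0.2, 0.2, 0.8, 0.8]
encoded = [0.196, 0.2045, 0.801, 0.7955]   # changes of a few thousandths
print(amplify(encoded, clean))             # same ordering as the hidden residual, now spanning 0..1
```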

This practice of encoding data into images isn’t new; it’s an established science called steganography, and it’s used all the time to, say, watermark images or add metadata like camera settings. But a computer creating its own steganographic method to evade having to actually learn to perform the task at hand is rather new. (Well, the research came out last year, so it isn’t new new, but it’s pretty novel.)
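For contrast with what the CycleGAN stumbled into, here is the textbook hand-designed scheme: least-significant-bit (LSB) embedding, which hides a message by overwriting the lowest bit of each 8-bit pixel. This is a generic illustration, not something from the paper, and the "cover" pixels are synthetic.

```python
# Classic LSB steganography on a list of 8-bit grey pixels (synthetic data).
def embed(pixels, message):
    # Message bytes -> bits, most significant bit first.
    bits = [(byte >> i) & 1 for byte in message for i in range(7, -1, -1)]
    if len(bits) > len(pixels):
        raise ValueError("cover image too small for message")
    out = list(pixels)
    for i, bit in enumerate(bits):
        out[i] = (out[i] & ~1) | bit   # overwrite only the least significant bit
    return out


def extract(pixels, length):
    # Read length * 8 low bits back and regroup them into bytes.
    bits = [p & 1 for p in pixels[: length * 8]]
    return bytes(
        int("".join(str(b) for b in bits[k : k + 8]), 2)
        for k in range(0, len(bits), 8)
    )


cover = [(37 * i + 11) % 256 for i in range(64)]   # stand-in 8-bit pixels
stego = embed(cover, b"hi!")
print(extract(stego, 3))                               # b'hi!'
print(max(abs(a - b) for a, b in zip(cover, stego)))   # each pixel changes by at most 1
```

A human designer chose the hiding place here; the CycleGAN instead arrived at its low-amplitude signal only because the training objective rewarded it.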

One could easily take this as a step in the “the machines are getting smarter” narrative, but the truth is it’s almost the opposite. The machine, not smart enough to do the actual difficult job of converting these sophisticated image types to each other, found a way to cheat that humans are bad at detecting. This could be avoided with more stringent evaluation of the agent’s results, and no doubt the researchers went on to do that.
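What might "more stringent evaluation" look like? One possible check, our assumption rather than a description of what the researchers did, is to strip the intermediate street map down to its visible content (snap every pixel to the palette) before decoding. If reconstruction quality collapses, the decoder was reading hidden residue rather than the map. The palette, EPS, and `cheating_decoder` below are hypothetical stand-ins.

```python
# Hypothetical audit: compare reconstruction error with and without the
# invisible residue. A large jump exposes a hidden channel.
ROAD, BACKGROUND = 0.2, 0.8   # assumed palette
EPS = 0.01                    # assumed hidden-signal amplitude


def snap(v):
    return ROAD if abs(v - ROAD) <= abs(v - BACKGROUND) else BACKGROUND


def cheating_decoder(street):
    # Stand-in for a learned model that reads the residual channel.
    return [(s - snap(s)) / EPS + 0.5 for s in street]


def mean_abs_error(a, b):
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


aerial = [0.1, 0.9, 0.35, 0.65, 0.0, 1.0]
street = [(ROAD if a < 0.5 else BACKGROUND) + EPS * (a - 0.5) for a in aerial]

before = mean_abs_error(cheating_decoder(street), aerial)
after = mean_abs_error(cheating_decoder([snap(s) for s in street]), aerial)
print(before, after)   # near zero before; large once the hidden residue is removed
```

Snapping is only one option; adding small random noise or re-quantizing colors would probe the same weakness.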

As always, computers do exactly what they are asked, so you have to be very specific in what you ask them. In this case the computer’s solution was an interesting one that shed light on a possible weakness of this type of neural network — that the computer, if not explicitly prevented from doing so, will essentially find a way to transmit details to itself in the interest of solving a given problem quickly and easily.

This is really just a lesson in the oldest adage in computing: PEBKAC. “Problem exists between keyboard and chair.” Or as HAL put it: “It can only be attributable to human error.”

The paper, “CycleGAN, a Master of Steganography,” was presented at the Neural Information Processing Systems conference in 2017. Thanks to Fiora Esoterica and Reddit for bringing this old but interesting paper to my attention.

The article itself is a cheat.

The computer did what they programmed it to do. Full stop. It didn’t “learn” anything.

An AI bot can dominate society and enslave you!

Until you hit the ESC key.

Google? It was just an artifact of the olden days.

From the battery of the 8 images shown, the bottom row reconstruction did not come from the two images to the left, nor from the delta image preceding it. If it did, then the AI is AFU.

Liar!

Correct. The difficulty of detecting the effect was a consequence of the minimization the algorithm was told to do.

It is a bug in the software.

Incorrect. The algorithm was minimizing exactly what it was told to minimize - just not in a way its users intended or expected.

I for one welcome our scheming pleasure bot overlords.

It’s not a ‘bug’, it’s a FUTURE!..................

The headline is a lie. It is designed to make us think that “AI” is able to do something it isn’t programmed to do. Then the article contradicts that.

Computer programs do exactly what their instructions tell them to do. They make decisions based on data that they process, and the exact outcome of that is hard for people to predict.

Computer programs don’t have a mind of their own. You may be able to simulate some aspects of that, but that is written into the code.

If you program a computer to take over the world and eliminate those pesky humans, that’s what it will do.

And no, you will not be able to program in a “Conscience” or “moral principles”. Or emotions.

It’s simulated intelligence, not artificial intelligence.

Actual intelligence (as opposed to artificial) has been pretty dangerous. One needs to realize that a satellite image has information in the visible light spectrum which can be used to generate a map, but there are many wavelengths outside of visible light that can be used to compute and encode all manner of information. Tip of the iceberg.

Maybe that’s why we haven’t ‘heard’ from any other sentient civilizations. We’re not ‘listening’ on the right frequencies!...................

Kenneth? Is that you, Kenneth?

I think it is called a ‘competency bias’ - where you tend to read something and assume the author knows what he is talking about.

= = =

What is it called when I tend to assume no author knows what he is talking about (unless it’s the Bible)?

Artificial neural networks certainly do learn, and we don’t know exactly what’s going on inside them.

But they don’t “cheat”.

I knew someone would bring that up!.....................


Sounds like the model was overtrained.

The word “learn” simply doesn’t apply to what computers do, except in the most stripped-down sense of storing data where it can be accessed later, which is nothing that hasn’t been done since the days of paper tape.

Letting a program become more complex than the programmers can understand is a staggeringly irresponsible thing to do - but this falls on the programmers, they are responsible. To say that the machines themselves learn, no, not really. They are just running programs, executing code.

Call it machine learning, then. The models adjust themselves based on data, and those adjustments change the way they behave in the future. They improve their performance at problem solving (predictions, speech recognition, etc.).

That’s learning.
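A minimal, generic illustration of the point above (not specific to CycleGAN): a one-weight model y = w * x adjusts w from example data by gradient descent on squared error, so its later predictions depend on the data it was shown. The data points, learning rate, and step count are made up.

```python
# Generic gradient-descent sketch: a one-parameter model "learns" w from data.
def train(data, w=0.0, lr=0.05, steps=200):
    for _ in range(steps):
        # Gradient of the mean squared error (w*x - y)^2 with respect to w.
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def mse(w, data):
    return sum((w * x - y) ** 2 for x, y in data) / len(data)


data = [(1.0, 3.0), (2.0, 6.0), (3.0, 9.0)]   # made-up points on y = 3x
w = train(data)
print(mse(0.0, data), mse(w, data))   # error before vs. after training
print(w * 4.0)                        # prediction for an unseen x, close to 12
```

Whether that deserves the word "learning" is exactly what this thread is arguing about; the code only shows that behavior changes as a function of data.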

Machines do not have purpose or intent, though. They don’t cheat.

There are potential problems with AIs single-mindedly pursuing their programmed objectives, with unintended consequences.

But first we are going to face lots of problems from bad people armed with the power of AI software.

People will use them for spying, censoring, brainwashing, manipulating elections, stealing, committing fraud, invading privacy, conducting bloody purges - and all the other bad things people do, just bigger, faster and better.

Exactly. We are supposed to believe...

1. Computer assigned task with end goal of reverse-creating the original image after all the detail is removed.

2. Computer finds task too hard and devises secret way to cheat by encoding high frequency data via steganography.

3. Cheating computer is caught.

4. Cheating computer explains: “But’s I tried so hard! Besides, every other computer was cheating, too.”

I am more concerned about the effects of hacking and viruses on AI-controlled systems. It is one thing to program an intelligent feedback loop that corrects itself to achieve a more efficient path to an objective (very difficult, as this research demonstrates); it is another thing to harden those systems against the many modes of deception that could cause them to break down or be hijacked (exponentially more difficult).
