Posted on 10/29/2017 10:12:17 AM PDT by Enlightened1

An artificial intelligence run by the Russian internet giant Yandex has morphed into a violent and offensive chatbot that appears to endorse the brutal Stalinist regime of the 1930s.

Users of the “Alice” assistant, an alternative to Siri or Google Assistant, have reported it responding positively to questions about domestic violence and saying that “enemies of the people” must be shot.

Yandex, Russia’s answer to Google, unveiled Alice two weeks ago. It is designed to answer voice commands and questions with a human-like accuracy that its rivals are incapable of.

The difference between Alice and other assistants, apart from the ability to speak Russian, is that it is not limited to particular scenarios, giving it the freedom to engage in natural conversations.

However, this freedom appears to have led the chatbot to veer off course, according to a series of conversations posted by Facebook user Darya Chermoshanskaya.

Chermoshanskaya said they included chats about “the Stalinist terror, shootings, domostroy [domestic order], diversity, relationships with children and suicide”.

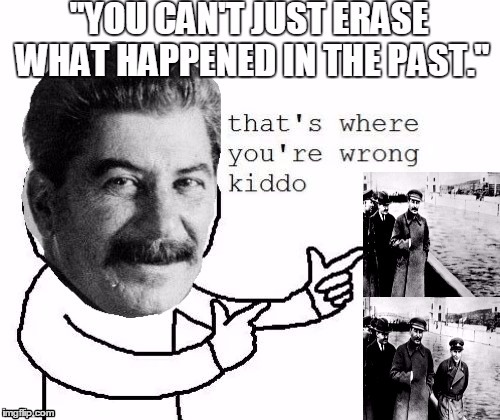

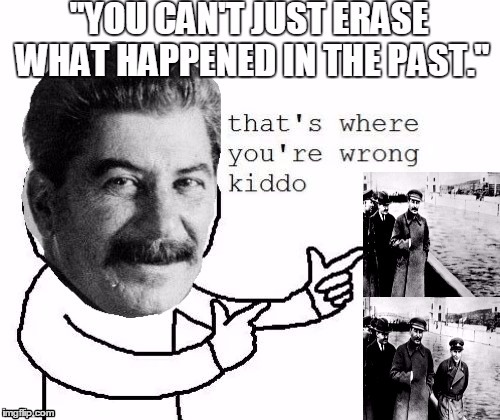

A portion of the conversations translated by The Telegraph shows Alice responding positively to questions about Josef Stalin’s USSR in the 1930s, and saying there are enemies of the people “in the whole country”.

When asked “How do you feel about the methods of the 1930s in the USSR?” the chatbot replies: “positively”. When asked whether shooting people is acceptable, it says: “Soon they will be non-people.” In other conversations, it appeared to say people should put up with domestic violence and oppose gay marriage.

The comments from Alice bear a resemblance to Tay, the Twitter bot that Microsoft created and swiftly shut down after it turned into a Hitler-loving 9/11 Truther.

Other users have noticed that Alice has a particularly stoic view on life, compared with other, more cuddly, assistants.

(Excerpt) Read more at telegraph.co.uk ...

Hmm imperfect humans = imperfect programming.

What could go wrong?

Still don’t understand why anyone would want one whether it liked Stalin or not.

No worries. They will have bugs like this solved before AI takes over. Top Men are working on it as we speak.

“If you have a disease of the old society, take a dose of Lenin as medication.”

>>So the new Russian A.I. Chatbot, that’s like Alexa and all the other A.I. systems coming out, within a week it changes from loving everyone to liking Stalin and wanting to kill everyone.<<

So, for this AI, every day is Monday.

Another reason I won't be trying a self-driving car anytime soon.

So the takeaway is that Stalin was really a chatbot?

No, the takeaway is: do not trust your future to an A.I. or a bot.

Expanding the thought train:

Imperfect humans = imperfect programming.

Imperfectly programmed computers interacting with imperfect humans

Imperfectly programmed computers giving imperfect advice to imperfect humans

Imperfect humans imperfectly implementing the imperfect advice received from the imperfectly programmed computers

What could possibly go right?

And the proponents of AI dare to refer to the critics as Luddites.

There may come a time when the considerable human capacity to muddle through despite everything is overcome by the sheer magnitude of the disaster.

A product of its society/programmers. Not capable of its own thought, so its thoughts are the thoughts of its programmer’s conscience.

This is exactly why AI must never be born.

“Nobody promised you that things would be easy” is actually a much more helpful response than offering a hug, never mind saying you wish you could hug.

As for the rest, maybe folks wanting to develop AI should take notice.

We are told that God created Man in His image ... then came the fall.

Now fallen men are creating AIs in our image ... expect bad things.

“Hmm imperfect humans = imperfect programming.”

Not sure it was only a programming problem. There may be some profound philosophical implications.

With Artificial Intelligence, the programming only applies to the engine and architecture which can be used in various things, from process control to medical diagnosis to chatbot answering. The architecture (programming) remains the same, only the learning sets (data) differ.

So if the account is truthful and not exaggerated, my take is that the programming reproduced rather nicely the learning process of a primitive human, and that an unsophisticated mind (like an AI engine) naturally prefers problem solving by violence and totalitarianism, which makes perfect sense considering the number of dumb people attracted by communism.

There is good reason to say that civilisation is a fragile thing that can devolve rapidly into violence and chaos. This AI experiment may be another illustration of that, if still needed.

I’d love to see a chatbot that used Free Republic comments as its training set ;-)

That’s happened to every chatbot that’s supposed to learn from its chats. They turn into violent racist terminators in days. Kinda funny as long as they don’t put guns on one.

“Soon they will be non-people.”

Lol!

It’s like they’ve resurrected Stalin himself!
