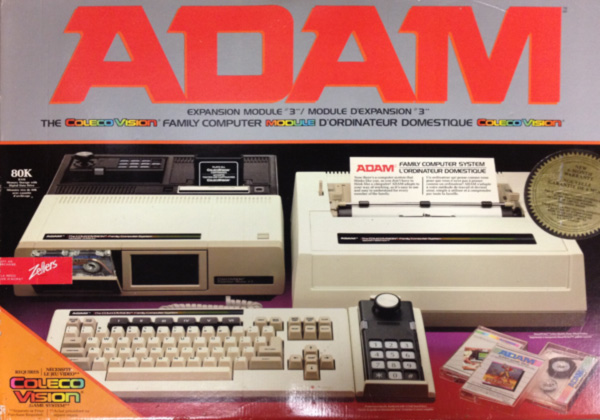

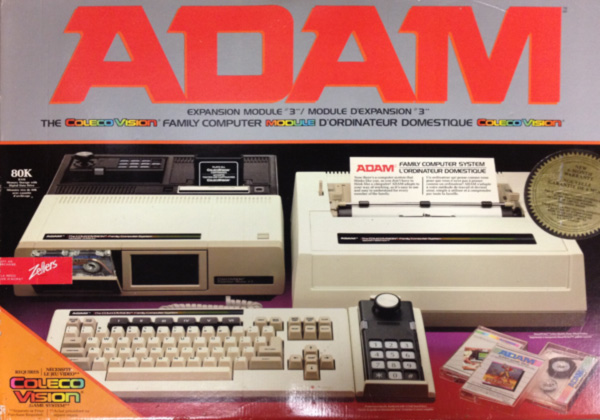

The original Adam processor was a Z-80.

Posted on 04/20/2021 6:45:24 PM PDT by anthropocene_x

Apple just launched its first custom processor for computers. The M1 chip is similar to the A-series processors inside the iPhone and iPad, and it powers just two devices: The late 2020 MacBook Air and MacBook Pro. And yet, Intel is still terrified, having mounted a massive ad campaign in an attempt to convince the world that the M1 MacBooks can’t stand up to Windows 10 laptops running on Intel hardware.

The campaign was somewhat half-baked, and has since drawn criticism and ridicule for its missteps. The M1 MacBooks offer formidable performance and excellent battery life, with M1 being built on a chip technology unavailable to Intel. If anything, Intel’s campaign is drawing more attention to the potential of ARM processors when it comes to notebooks and desktops. And Intel’s ARM nightmare is only just beginning.

(Excerpt) Read more at bgr.com ...

I tried to stay out of the ‘fan’ (fab) at all costs; I did DC and RF on-wafer testing (before dice and mount), design review for testability, wrote the FORTRAN code for the Keithley VIMS and the HP RF test equip ...

“I recently bought my daughter a new M1-powered laptop”

Santa brought a new MacBook Pro M1 to Moe. Love it so far.

Bought a MacBook M1 2 months ago.

Goodbye, Intel - your lunch has been eaten. I do high-end computing and it's not even a contest, plus I can literally leave my laptop unplugged for well over a day without even thinking of needing a charge. Multiple days if I'm only using it in a general way.

I still haven't heard the fan come on - even under the highest loads. My Intel machines keep the house warm during the winter.

PS - I'm the furthest thing from a Mac fanboi...

“it powers just two devices: The late 2020 MacBook Air and MacBook Pro”

And, as of today, the iPad.

Yeah, I should have said, ‘never did anything worthwhile with it.’

Had Microsoft not lost the phone war to Google so badly and early, maybe they could have been the incentive Intel needed to develop their ARM product, exactly like Apple did. But, that Atom processor was so horrible, there really wasn’t a device that it could have been developed in.

...and the Mac Mini which now sells for $699.

With M1 now running iPad, I expect Xcode for iPad come WWDC.

I’ll dump Mac for iOS (as a developer) and not look back.

(Dumped Windows years ago.)

I expect to see everything running on this architecture in 10 to 15 years and Windows OS will be just another trashy niche OS for hobbyists and Microsoft will focus on Azure and Microsoft Office as their main revenue streams.

———————

You forgot one huge market segment. Games. Last year, a respectable $37.4 Billion, followed by Consoles (Intel-based) at $51.2 Billion.

Very few people game on a Mac (cost of entry is several times that of a comparably equipped PC). Very few game developers will develop games for this platform (macOS 10.x is 2.11% of all platforms used, according to Steam).

Likewise, the Linux share of the games market is so small, it’s not worth mentioning (only 0.9% of all platforms used, according to Steam).

Compare the benchmarks: the M1 will displace x86 on performance alone, and low power is always a plus.

This is what you can do when you have billions of iPhone dollars to invest in R&D...

The Z-80 was such a great processor for its day. Did tons of assembly language with it.

Good thing my Apple products last for more than 10 years then. The M1 chips are basically the same thing that has been running in iPads and iPhones for years, but you are free to waste money on anything you want to, at least for a year or two more.

The Z-80 was such a great processor for its day. Did tons of assembly language with it.

————

6502 coder here...

Parallels is up and running faster than ever before. The biggest problem for the M1 is that there is no cheap RAM or SSD; Apple charges a premium there, although they do sport Thunderbolt speed on external SSDs.

Good thing my Apple products last for more than 10 years then. The M1 chips are basically the same thing that has been running in iPads and iPhones for years, but you are free to waste money on anything you want to, at least for a year or two more.

———-

A dropped iPhone with a cracked screen won’t. And I’m at 94% max battery life on my iPhone 11 Pro Max... lucky I still have AppleCare.

Can’t upgrade once you buy. That sucks.

With my 2018 MSI Windows gaming laptop, I’ve already upgraded the memory to 128GB and the SSD to 8TB, and I’m thinking of upgrading the dual Nvidia 1080s to dual 3080s. It will last me years and not operate like a 2018 machine in 2028, if I’m still using it.

Just like almost any Windows PC. Upgrade as needed/ wanted.

Try upgrading anything in any Apple product, except perhaps the Mac Pro.

Dann You Autocorrect.

Graphics performance on any real AAA game that eats CUDA and GPU cores for lunch is a huge issue. That M1 8-core integrated GPU is very low end. (Same as an entry-level Nvidia GTX 1650).

I’ll wait maybe 5 years.

Same with rendering. I tried the eGPU route once. Worked beautifully on a Windows laptop with Thunderbolt. (Was even able to use the external and internal cards in SLI mode). On a MBP, issue after issue after issue.

I agree with you somewhat that Intel has rested on its laurels for too long. I’m glad that there is healthy competition in the market. I’m not particularly interested in fragmentation due to incompatibilities.

I don’t really follow the CPU market in any kind of detail, as I tend to buy a computer once every 10 years. It has been clear for some time that in the Intel world the improvements from one generation to the next are really, really incremental. That is at least somewhat to be expected when a market is somewhat mature I suppose, but I’m just not all that happy with the state of any of this stuff.

Guess I’m spoiled at having seen the huge leaps from the 8086, 286, 386, to the Pentium. Not a great deal of difference I’m seeing these days from the i7 that was out more than a decade ago (that’s what my last desktop was), to the current i9. Yeah, better cache, pipelining, and more cores, but most software really doesn’t take intelligent advantage of that. Don’t know if the failure is in the software or the hardware. I suspect the former.

I currently have a desktop with 16 cores, and 32GB of ram. It will really handle anything I can conceivably throw at it in my foreseeable future. Overall I’m pleased with the insane amount of computational power I have right here on my desk, but I kinda yearn for the days of huge leaps. I suspect at this point I’ll be eternally disappointed, as we’re already pushing the limits of the universe itself.

The speed of light limits how fast information can travel across a chip. Quantum mechanics limits how small you can make things until the physics gets weird.
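To put a rough number on the speed-of-light point, here is a back-of-envelope sketch. The function name and the `velocity_fraction` parameter are made up for illustration, and real on-chip signals propagate well below the vacuum speed of light, so treat the result as an upper bound rather than a measurement.

```python
# Back-of-envelope: how far can a signal travel during one clock cycle?
SPEED_OF_LIGHT_M_PER_S = 299_792_458  # exact, by definition of the metre


def distance_per_cycle_cm(clock_hz: float, velocity_fraction: float = 1.0) -> float:
    """Distance in centimetres covered in one clock period.

    velocity_fraction is an illustrative assumption: 1.0 means light in a
    vacuum; signals in real wiring are slower, so pass a value below 1.0
    to model that.
    """
    return SPEED_OF_LIGHT_M_PER_S * velocity_fraction / clock_hz * 100


if __name__ == "__main__":
    for ghz in (1, 3, 5):
        cm = distance_per_cycle_cm(ghz * 1e9)
        print(f"{ghz} GHz: about {cm:.1f} cm per cycle at the vacuum speed of light")
```

At 3 GHz the upper bound works out to roughly 10 cm per cycle, which is why cranking the clock alone stops paying off at some point.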

Quantum computing is still a tinker toy. Maybe it will get better, but for general-purpose computing, I’m not sure how much that will help.
