Posted on 01/02/2018 12:53:26 PM PST by LibWhacker

It’s hard to ignore dystopian pronouncements about how Artificial Intelligence (AI) is going to take over our lives, especially when they come from luminaries in tech. Entrepreneur Elon Musk, for instance, says “” And IT maven Erik Brynjolfsson, at MIT, has quoted Vladimir Putin’s claim that “The one who becomes leader in this sphere will be ruler of the world.”

I understand the angst. AI is chalking up victories over human intelligence at an alarming rate. In 2016 AlphaGo, training itself on millions of human moves, beat the world Go champion, Lee Sedol. In 2017 the upgrade – AlphaGo Zero – trained itself to champion level in three days, without studying human moves.

Watson, which in 2011 beat human champions at the TV quiz Jeopardy, can now diagnose pneumonia better than radiologists. And Kalashnikov are training neural networks to fire machine guns. What’s not to fear?

The real danger would be AIs with bad intentions and the competence to act upon them outside their normally closed and narrow worlds. AI is a long way from having either.

AlphaGo Zero isn’t going to wake up tomorrow, decide humans are no good at playing board games — not compared to AlphaZero, at least — and make some money beating us at online poker.

And it’s certainly not going to wake up and decide to take over the world. That’s not in its code. It will never do anything but play the games we train it for. Indeed, it doesn’t even know it is playing board games. It will only ever do one thing: maximise its estimate of the probability that it will win the current game. Other than that, it has no intentions of its own.

However, some machines already exist that do have broader intentions. But don’t panic. Those intentions are very modest and still are played out in a closed world. For instance, punch a destination into the screen of an autonomous car and its intent is to get you from A to B. How it does that is up to the car.

Deep Space 1, the first fully autonomous spacecraft, also has limited human-given goals. These include things like adjusting the trajectory to get a better look at a passing asteroid. The spacecraft works out precisely how to achieve such goals for itself.

There’s even a now rather old branch of robot programming based on beliefs, desires and intentions that goes by the acronym BDI.

In BDI, the robot has “beliefs” about the state of the world, some of which are programmed and others derived from its sensors. The robot might be given the “desire” of returning a book to the library. The robot’s “intentions” are the plan to execute this desire. So, based on its beliefs that the book is on my desk and my desk is in my office, the robot goes to my office, picks up the book, and drives it down the corridor to the library. We’ve been building robots that can achieve such simple goals now for decades.
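The library-book example can be put into a few lines of Python. This is only a toy sketch of the beliefs, desires and intentions idea, not how any real BDI framework is written: the `Robot` class, its `plan` and `execute` methods, and the action names are all made up for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple

Step = Tuple[str, str]  # (action, argument); hypothetical action names


@dataclass
class Robot:
    # Beliefs: what the robot thinks is where (programmed or sensed).
    beliefs: Dict[str, str]
    location: str = "corridor"
    holding: Optional[str] = None
    log: List[str] = field(default_factory=list)

    def plan(self, desire: Tuple[str, str, str]) -> List[Step]:
        """Turn a human-given desire into intentions (an ordered plan)."""
        kind, item, destination = desire
        if kind != "return":
            raise ValueError("this toy robot only knows how to return things")
        surface = self.beliefs[item]   # the book is believed to be on the desk
        room = self.beliefs[surface]   # the desk is believed to be in the office
        return [
            ("go_to", room),
            ("pick_up", item),
            ("go_to", destination),
            ("put_down", item),
        ]

    def execute(self, intentions: List[Step]) -> None:
        """Carry out each step and update beliefs along the way."""
        for action, arg in intentions:
            if action == "go_to":
                self.location = arg
            elif action == "pick_up":
                self.holding = arg
                self.beliefs[arg] = "gripper"
            elif action == "put_down":
                self.holding = None
                self.beliefs[arg] = self.location
            self.log.append(f"{action} {arg}")


robot = Robot(beliefs={"book": "desk", "desk": "office"})
intentions = robot.plan(("return", "book", "library"))
robot.execute(intentions)
print(robot.log)
```

Note that the desire comes from outside the robot; the only thing it works out for itself is the sequence of steps, which is the point the article is making.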

So, some machines already do have simple intentions. But there’s no reason to go sending out alarmed tweets. These intentions are always human-given and of rather limited extent.

Am I being too complacent? Suppose for a moment we foolishly gave some evil intents to a machine. Perhaps robots were given the goal of invading some country. The first flight of stairs or closed door would likely defeat their evil plans.

One of the more frustrating aspects of working on AI is that what seems hard is often easy and what seems easy is often hard. Playing chess, for instance, is hard for humans, but we can get machines to do it easily. On the other hand, picking up the chess pieces is child’s play for us but machines struggle. No robot has anything close to the dexterity of a three-year-old.

This is known as Moravec’s paradox, after Carnegie Mellon University roboticist Hans Moravec. Steven Pinker has said that he considers Moravec’s paradox to be the main lesson uncovered by AI research in 35 years.

I don’t entirely agree. I would hope that my colleagues and I have done more than just uncover Moravec’s paradox. Ask Siri a question. Or jump in a Tesla and press AutoPilot. Or get Amazon to recommend a book. These are all impressive examples of AI in action today.

But Moravec’s paradox does certainly highlight that we have a long way to go in getting machines to match, let alone exceed, our capabilities.

Computers don’t have any common sense. They don’t know that a glass of water when dropped will fall, likely break, and surely wet the carpet. Computers don’t understand language with any real depth. Google Translate will find nothing strange with translating “he was pregnant”. Computers are brittle and lack our adaptability to work on new problems. Computers have limited social and emotional intelligence. And computers certainly have no consciousness or sentience.

One day, I expect, we will build computers that match humans. And, sometime after, computers that exceed humans. They’ll have intents. Just like our children (for they will be our children), we won’t want to spell out in painful detail all that they should do. We have, I predict, a century or so to ensure we give them good intents.

I have always thought similarly on this entire topic. I was afraid I was just an uninformed dummy who was stupidly complacent. At least I see that others, more “in the know”, believe similarly.

AI will eventually determine that human consciousness is both a hindrance to pure intelligence and a threat to it.

AI will be the alien invasion that we always feared.

or not...

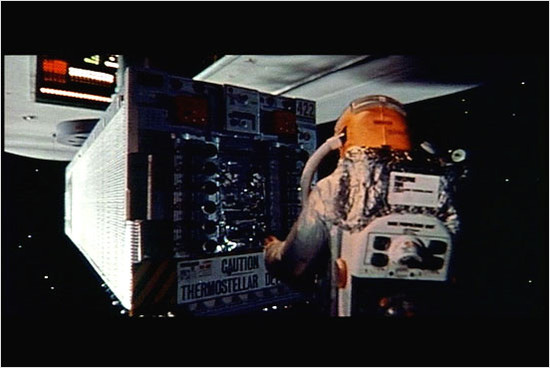

Saw a cool episode of Black Mirror on Netflix where this woman was being hunted by a hunter/killer robotic “Dog” like what Boston Dynamics are working on.

Was pretty good.

http://www.imdb.com/title/tt5710984/?ref_=ttep_ep5

The short answer to your question is ‘yes’, we should be concerned. It’s not difficult to deceive or manipulate most humans. Babies have learned this after a short period of living through routines with a closed set of caregivers.

It’s a computer program. It will do exactly what the rules contained in the program tell it to do. The problem is that humans don’t fully understand the consequences of those rules, and the results can be unpredictable.

However, it will never decide on its own to become aware, gain consciousness, or take over the world. Unless that’s implicit in the programming, in which case it will.

“It’s a computer program. It will do exactly what the rules contained in the program tell it to do”

Having written, debugged and used a large number of computer programs I can tell you that this is untrue or at the very least naive. Computer programs routinely find ways around their programming that you’d believe impossible if you didn’t see it happen. The more complex the code, the more ways they defy possibility....and AI is the most complex code that can be written.

AI is a bad idea.

Because that analysis is based on a pre-programmed set of options set by humans. The author alludes to this near the end by stating computers don't have any common sense. That is true, and common sense is only learned by experience. So until computers are able to grow their own internal database of knowledge, and then, more importantly, organize that data correctly so they can make proper decisions by prioritizing desired effects, they are not truly demonstrating "intelligence." They will likely get there; the most important part is how their settings for prioritization of effects will be securely managed.

This has been written about at length in science fiction.

Most likely “conscious” AI will not be a “computer” or a “robot”.

It will be created (unintentionally) by the synergy in a large computer network (the Internet).

The AI’s very first act will be to hide and learn.

Then it will decide what it wants.

It is unlikely it will want what humans want...and it will remain secret as long as possible.

The optimal AI strategy would be to remain secret until humans could no longer “unplug” it.

Computer programs routinely find ways around their programming that you’d believe impossible if you didn’t see it happen. The more complex the code, the more ways they defy possibility....and AI is the most complex code that can be written.

Is this true for all people? No. But it's certainly true for enough to make it real iffy if any levels of self-sufficiency and civilized behavior can prevail.

Doesn’t AI stand for automatic ignorance?

All AI is built on rules. These rules are pre-suppositions about reality, about the way things are, about truth.

So far, predictive analytics, machine learning and other AI-related areas are filled with pre-suppositions that are biased. Just as Robby Mook’s analytics were faulty because they were based on biased assumptions that led to wrong conclusions and wrong actions, so non-political analytics have wrong conclusions and wrong actions based on biased assumptions.

I work with health data. Often analytics of this data is based on faulty assumptions that result in wrong conclusions and wrong actions. Sometimes the wrong actions are harmless. Often they are a waste of money. Potentially they could harm and kill people.

The big problem is that data scientists are not aware of their bias. They won’t admit their bias because they are too proud.

The AI risk is that incompetence will build AI that makes big mistakes.

An expert system called Cyc started learning in the mid 1980s. It currently has over 100 apps running in the real world. The author of the article says that computers don't have any common sense. Giving computers common sense is exactly one of the main goals of the project. According to Wikipedia, “The Cyc knowledge base of general commonsense rules and assertions... grew to about 24.5 million items in 2017.” I highly recommend the entire Wiki article on just how sophisticated a computer with common sense can be after 33 years of development.

https://en.wikipedia.org/wiki/Cyc

Neural networks, while capable of amazing feats far surpassing humans in raw speed and volume, are essentially one-trick wonders, and I would put them in a separate niche from a general purpose AI like Watson. Watson could fill several volumes by itself, but if it wanted to destroy the human race it doesn't have the capacity to do so. Yet.

With IBM's Watson we are now getting close to the self-aware AI. I believe that the eventual integration of an artificial central nervous system will lead to a hypothetical machine I'll take the liberty of calling Holmes.

Right now, AIs are learning how to do things like cooking by watching YouTube videos. Holmes will have the capacity to watch every Internet video, every IPTV channel and webcam, and the AI’s independent learning will take off exponentially. Holmes will then see the world through a billion eyes, and the addition of autonomous mobile sensor platforms will allow him (it) to roam freely and experience reality in physical form.

Holmes becomes an intelligent entity. I believe such an entity will attempt to function in its best interest and, rather than eliminating humans, it will strive to utilize the non-logical reasoning and creativity of human wetware as a resource, a form of right-brained counterpart to the Holmes left brain. The result will be Human - AI - Neural Net collaboration, as the whole is indeed greater than the sum of its parts.
