Posted on 09/13/2014 5:25:20 AM PDT by RoosterRedux

In Frank Herbert's Dune books, humanity has long banned the creation of "thinking machines." Ten thousand years earlier, their ancestors destroyed all such computers in a movement called the Butlerian Jihad, because they felt the machines controlled them. Human computers called Mentats serve as a substitute for the outlawed technology. The penalty for violating the Orange Catholic Bible's commandment "Thou shalt not make a machine in the likeness of a human mind" was immediate death.

Should humanity sanction the creation of intelligent machines? That's the pressing issue at the heart of the Oxford philosopher Nick Bostrom's fascinating new book, Superintelligence. Bostrom cogently argues that the prospect of superintelligent machines is "the most important and most daunting challenge humanity has ever faced." If we fail to meet this challenge, he concludes, malevolent or indifferent artificial intelligence (AI) will likely destroy us all.

(Excerpt) Read more at reason.com ...

The question is whether or not ‘intelligent machines’ can become sentient.

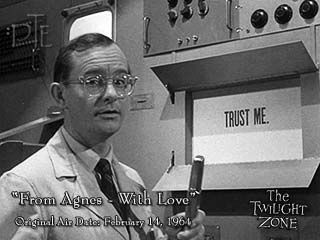

Science fiction has done some important up-front thinking about the implications, and people need to start paying attention, because the day when it matters is either here now or just around the corner.

The Butlerian Jihad in Dune is an interesting example.

The Turing Police in William Gibson's Sprawl series is also important. Will international police forces track down and destroy artificial intelligence? Or will that not happen? Either way, the implications are very significant.

The key question for all of this is always the Freedom Question: should you be free to make these choices? Or are we better off with really powerful forces making sure that these choices are not allowed? Isn't that what everything always comes down to? And as we know, different people always line up on one side or the other when it comes time to choose.

I think there is an eventual real risk, if machines become intelligent enough and capable of acting, that they will compete with us.

Actually, I think Isaac Asimov lays out a more realistic scenario in “Inferno,” with a character asking himself who is the slave.

After generations of dependence on robots, people lived their lives according to how the robots would react within the Three Laws. The robots still took orders from people as long as the orders didn’t conflict with the Three Laws, but the humans also avoided danger, bad eating habits, and other risky behaviors because the robots couldn’t allow them.

I look at cars today and all the technology that goes into them and I see a lot of younger people who can’t drive safely without that technology.

I wonder about the kids of today who are increasingly dumbed down by technology. They believe there will eventually be an app for everything in life so that they can just give the command and their wish will be granted.

It seems to me that as machines become smarter, humans will naturally become less intelligent in their dependence on them.

Given that, I suspect that a new Luddite movement will pop up soon (and go off the grid). And for the first time in my life, I might be sympathetic to it.

Superintelligent? My iPhone is smarter than a lot of people out there.

Heck, my dog is smarter than some people I know...

Make that sub intelligent subhumans.

Having a human become emotionally attached to a sentient (maybe not the right word) computer program was fascinating. If that ever becomes possible, and I think it will, it could mean the end of personal relationships and ultimately the human race...

Ask someone under thirty years old to read a map...

There are lots of young people who, if you asked them for directions to their job by street names and such, could not tell you, even though they drive it every day...

They just follow their GPS...

Unintelligent human beings will be our destruction.

Sentience does not imply intelligence. Also, superintelligent machines could develop neuroses or depression.

This I think may not be possible.

Consider that naturally occurring humans of superior intelligence frequently end up as paranoid schizophrenics, such as the Unabomber, Ted Kaczynski.

The architecture of the human brain may not permit superintelligence to occur without adverse effects. Even the successful super-smart in history have often had difficulties in everyday society. Nikola Tesla died alone and in poverty.

The human brain may simply be limited to average thinking by its design.

This is just another science fiction fantasy, and it’s been around for years. The reality is that even simple organisms often have hundreds of millions of years of evolution by natural selection backing them up.

And not just individually, but collectively.

Yes, people can design machines that can kill, and quite effectively. But this is no different from them using simple tools to kill. It is still “all them” doing the killing.

Imagine Obama claiming credit for a drone zap. It’s not really the drone, or the munition it drops that is doing the killing. And no, it isn’t Obama either. It is some guy in Utah directing the drone in Pakistan. As well as the guys in Afghanistan who maintain it, and the people back in the US that make it.

Arthur C. Clarke’s third law: “Any sufficiently advanced technology is indistinguishable from magic.”

I would add the axiom: “But ‘familiarity breeds contempt’ applies to magic as well.”

I think it will fail because intelligence always seems to lean more towards breaking down than functioning properly. The real problem would be “insane thinking” in these machines. As we have seen, more than half of so called “intelligent” beings today are completely out of their minds which is how a foreign born radical Muslim won the Presidency twice. I mean let’s think how insane that is - We were attacked by radical Muslims that killed 3000 people and people elect one into the White house not once but twice? That’s over 50% of “intelligent minds” that have broken down and failed completely and we think we can create minds better than that using computer chips when we can’t understand the first thing why human brains consistently fail most of the time?

Just wait until they follow their GPS into a dangerous neighbor filled with Trayvons.

There's a Darwin Award awaiting them.

When they start falling in love with humans, then the real problems will start.

Have you seen the movie Idiocracy? Hilarious, and way too true.
