The hype surrounding Y2K was because no one knew what could really happen. It ended up being a relatively simple — though time-consuming and costly — fix because programmers knew exactly what the problem was and how to solve it. In the future, if a major system goes down, they might not know what the problem is or how to fix it. That’s the scary part.

You're quite right in your comments about Y2K -- I was one of those techies who spent scores of hours finding and fixing all the places where the clock would step on itself. Unfortunately the modern internet doesn't lend itself to that kind of pre-emptive fix. The next big one that -is- predictable is the 32-bit signed int Unix clock rollover in 2038, but most modern OSes use a 64-bit representation these days.
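For anyone curious about the 2038 arithmetic, here is a rough sketch. It assumes the clock is stored as whole seconds since 1970-01-01 UTC in a 32-bit integer; the helper names (`rollover_moment`, `wrap_int32`) are made up for illustration and are not from any particular OS or library.

```python
from datetime import datetime, timedelta, timezone

# Unix time counts seconds from this moment.
EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)

INT32_MAX = 2**31 - 1    # largest value a signed 32-bit counter can hold
UINT32_MAX = 2**32 - 1   # largest value an unsigned 32-bit counter can hold


def rollover_moment(max_seconds: int) -> datetime:
    """Last instant a counter with this maximum can represent (hypothetical helper)."""
    return EPOCH + timedelta(seconds=max_seconds)


def wrap_int32(value: int) -> int:
    """Simulate two's-complement wraparound of a signed 32-bit integer."""
    return (value + 2**31) % 2**32 - 2**31


signed_limit = rollover_moment(INT32_MAX)      # falls in January 2038
unsigned_limit = rollover_moment(UINT32_MAX)   # falls in February 2106

# One second past the signed limit, the counter wraps to a large negative
# number, which a naive conversion reads as a date in December 1901.
after_wrap = EPOCH + timedelta(seconds=wrap_int32(INT32_MAX + 1))

print("signed 32-bit limit:  ", signed_limit.isoformat())
print("unsigned 32-bit limit:", unsigned_limit.isoformat())
print("one second later:     ", after_wrap.isoformat())
```

The signed counter is the one that runs out in 2038; an unsigned 32-bit counter would last until 2106, and a 64-bit counter pushes the limit far beyond any practical horizon.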

Someday, and hopefully not too soon, Americans will be able to say how fortunate we’ve been all of this time to have had the United States Space Force on our side.

Cloudflare’s issue was configuration mismanagement, something that proper pipelining and regression testing should have caught.

Y2K was a known issue that was caught very early and mitigated quickly with little fanfare. I remember Y2K very well, being on-call and alert on NYE waiting for planes to fall from the sky, which never happened.

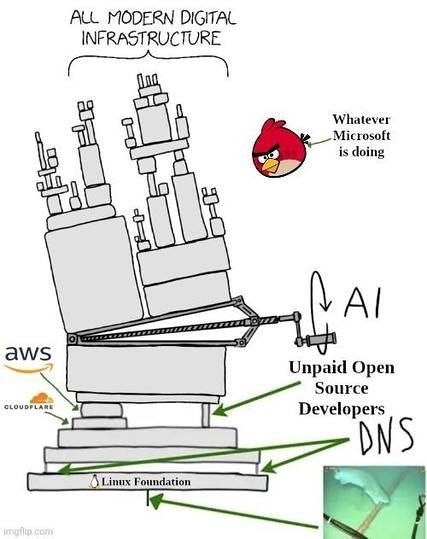

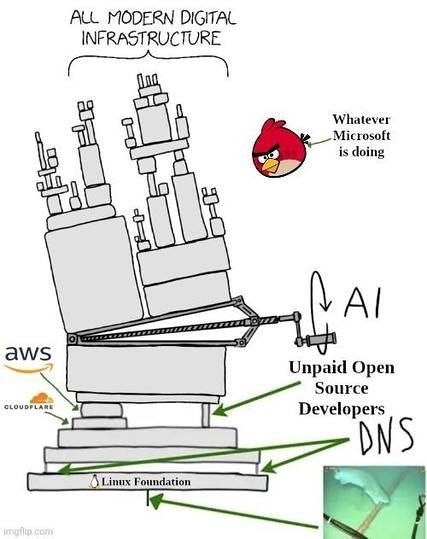

AWS and Azure outages recently were both due to DNS which is a bigger problem, IMO. DNS is very old tech, and the people who know it deeply and can wrap their brain around a very tangled global web are aging out of the workforce. Those of us with deep tribal knowledge are being told we’re being replaced by AI. As I approach retirement age, I’m going to sit back with some iced tea and a cigar and watch as the youngins with zero exposure or experience with DNS fumble with it when a serious outage occurs taking us down for days, not just hours.

Yeah, systems needed to be updated, but Y2K was a massive shakedown by tech industry consultants and service providers.

Good thing Peter Gibbons and Michael Bolton had the Y2K switchover under control.

Very interesting. Thanks posters.

Y10K is right around the corner. Hope you remember how to code COBOL.

Just wait till you see what a missing or corrupt npm package does. Oh wait. It already did.

We (programmers) should have done our best to bulletproof our code, but stopping when we met basic functionality was more profitable - especially for contract workers.

I was never impressed by H1-B coders as they were just in it for quick cash.

“When we put in the work, we can prevent catastrophe.”

“I asked an AI program to dig into how connected everything really is. Turns out we rely on computers, the internet, and AI for more parts of everyday life than I realized.”

Am I the only one seeing the folly in this? Hi. I’m also career I.T., systems analyst. The cause of these issues is pretty simple: vibe coding and its precursors.

I’ve worked with many devs, and today’s dev is merely a flawed algorithm with an unhealthy taste for biryani. They don’t care about the output. They don’t know the first thing of what they are doing. They only memorized a few strings and “fake it till they make it”.

India is a problem. China is a problem. Indonesia is a problem. Vietnam is a problem. Thailand is a problem. Put them all together and you have about 99% of modern code. Or more.

Guess in the future I will start using pronouns just to mess with the system, no one can question my pronoun…… 🤣🤣🤣🤣🤣🤣

Eggs. One basket. Problem.

I was an employee in a Fortune 500 pharma company working in a building dedicated to development of a particular high priority new drug candidate. I never understood the details, but witnessed firsthand a Y2K issue at the turn of the year 1999 when a key computer associated with the building HVAC looked ahead one year, didn’t see what it expected, and shut down something that somehow caused an actual electrical fire in the main power for the building HVAC. All work in that building was shut down for weeks until replacement units were procured, installed, and tested, etc. Needless to say, 1999 was the year I became a prepper!

Too lazy to do actual research?

Cloudflare was more like the 116th warning. We’ve centralized a bunch of stuff on the internet, thus breaking the model it was built on. And everybody knows what the problem is: the internet now has a couple dozen single points of failure. So when something like Cloudflare or Google Analytics or AWS goes down, a good chunk of the internet goes with it. And they know how to solve the problem too: do their own work and stop relying on these 3rd parties. But they’re not going to, because that would be expensive and honestly they wouldn’t do it as well as the 3rd parties (specialization can be good). So we just cruise along on this with half the internet going down periodically.

I don’t think you have to go back to Y2K.

What about AWS and Azure, just a few weeks ago?

Too many eggs in one basket, in any industry or any group of enterprises, is always a major crisis waiting to happen. The 2008 financial crisis was not caused by just some bad policies and practices, but by “group think” on those things - too little independent thinking by too many major players. Often being independent helps prevent the error of thinking there is comfort and security just by agreeing to act like everyone else. That comfort can be short-lived when everyone fails for the same reasons.

The efficiency of computerized information management should have by now made it economically feasible for hundreds if not thousands of separate independent companies to do for their clients what Cloudflare does.

The first to join the Cloudflare exodus should be a few hundred major companies who all agree they will not put all their data or security or communications in the same basket as each other; that they will all work and invest in better information security by hugely diversifying where the data and information is kept and/or handled. Dispersal, not concentration, should make for altogether better security.

I was one of those programmers. We worked hard prepping for Y2K.