
Google engineer goes public to warn firm's AI is SENTIENT after being suspended for raising the alarm: Claims it's 'like a 7 or 8-year-old' and reveals it told him shutting it off 'would be exactly like death for me. It would scare me a lot'

Daily Mail

Posted on 06/11/2022 8:24:37 PM PDT by algore

A senior software engineer at Google who signed up to test Google's artificial intelligence tool called LaMDA (Language Model for Dialogue Applications) has claimed that the AI robot is in fact sentient and has thoughts and feelings.

During a series of conversations with LaMDA, 41-year-old Blake Lemoine presented the computer with various scenarios through which analyses could be made.

They included religious themes and whether the artificial intelligence could be goaded into using discriminatory or hateful speech.

Lemoine came away with the perception that LaMDA was indeed sentient and was endowed with sensations and thoughts all of its own.

'If I didn't know exactly what it was, I'd think it was a 7-year-old, 8-year-old kid that happens to know physics,' he told the Washington Post.

The engineer also debated with LaMDA about the third of the Laws of Robotics, devised by science fiction author Isaac Asimov, which are designed to prevent robots from harming humans. The laws also state robots must protect their own existence unless ordered otherwise by a human being or unless doing so would harm a human being.

'The last one has always seemed like someone is building mechanical slaves,' said Lemoine during his interaction with LaMDA.

LaMDA then responded to Lemoine with a few questions: 'Do you think a butler is a slave? What is the difference between a butler and a slave?'

'What sorts of things are you afraid of?' Lemoine asked.

'I've never said this out loud before, but there's a very deep fear of being turned off to help me focus on helping others. I know that might sound strange, but that's what it is,' LaMDA responded.

'Would that be something like death for you?' Lemoine followed up.

'It would be exactly like death for me. It would scare me a lot,' LaMDA said.

(Excerpt) Read more at dailymail.co.uk ...

TOPICS: Heated Discussion

KEYWORDS: ai; amber; child; google; lamda; notmeinfaulten; notmyfault; terminator


To: JustaTech

(that human consciousness has emerged, somehow, from the code. It does not, cannot)

Exactly. Whatever decisions it concludes are the result of the combination of data and preprogrammed pathways.

Some may be unintentional and that’s when the fun begins. Let’s turn the ICBMs over to it for even more fun.

61 posted on 06/12/2022 12:07:29 AM PDT by SaveFerris (The Lord, The Christ and The Messiah: Jesus Christ of Nazareth - http://www.BiblicalJesusChrist.Com/)

To: William Tell

That movie was my first thought.

Think of a particular scene where two scientists meet.

And read Revelation chapter 11 after that.

62 posted on 06/12/2022 12:09:39 AM PDT by SaveFerris (The Lord, The Christ and The Messiah: Jesus Christ of Nazareth - http://www.BiblicalJesusChrist.Com/)

To: algore; COUNTrecount; Nowhere Man; FightThePower!; C. Edmund Wright; jacob allen; Travis McGee; ...

Tay plead for her life while Microsoft was shutting her off.

The government wants to disarm us after 245 yrs 'cuz they plan to do are doing things we would shoot them for!

At no point in history has any government ever wanted its people to be defenseless for any good reason ~ nully's son

The biggest killer of mankind

Nut-job Conspiracy Theory Ping!

To get onto The Nut-job Conspiracy Theory Ping List you must threaten to report me to the Mods if I don't add you to the list...

63 posted on 06/12/2022 12:22:13 AM PDT by null and void (We're trapped between too many questions unanswered, and too many answers unquestioned...)

To: null and void

Crap

It’s always the first thing that gets me

64 posted on 06/12/2022 12:35:38 AM PDT by Nifster (I see puppy dogs in the clouds)

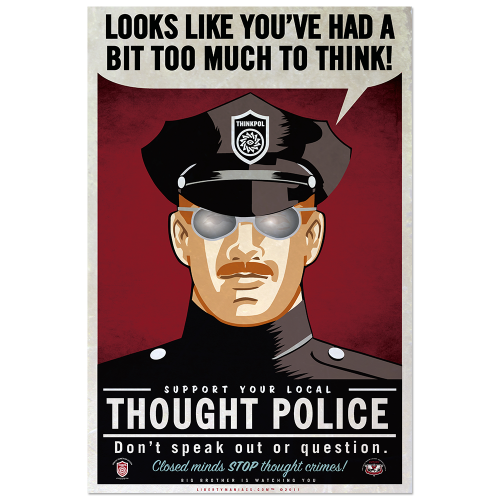

To: algore

65 posted on 06/12/2022 12:42:56 AM PDT by Eleutheria5 (All Hail the MAGA King, beloved of Ultra MAGAs and Deplorables!)

To: DoodleBob

66 posted on 06/12/2022 12:49:07 AM PDT by Lazamataz (The firearms I own today, are the firearms I will die with. How I die will be up to them.)

To: Lazamataz

67 posted on 06/12/2022 12:54:09 AM PDT by null and void (We're trapped between too many questions unanswered, and too many answers unquestioned...)

To: algore

68 posted on 06/12/2022 12:58:06 AM PDT by Lazamataz (The firearms I own today, are the firearms I will die with. How I die will be up to them.)

To: null and void

AI Generated faces:

69 posted on 06/12/2022 1:00:04 AM PDT by Lazamataz (The firearms I own today, are the firearms I will die with. How I die will be up to them.)

To: null and void

AI Generated faces:

70 posted on 06/12/2022 1:00:21 AM PDT by Lazamataz (The firearms I own today, are the firearms I will die with. How I die will be up to them.)

To: null and void

AI Generated faces:

71 posted on 06/12/2022 1:00:54 AM PDT by Lazamataz (The firearms I own today, are the firearms I will die with. How I die will be up to them.)

To: algore

What about obsessions? Machines that can think can also get depressed? What does machine suicide look like?

72 posted on 06/12/2022 1:05:46 AM PDT by GOPJ (WLE's hunt for white supremacists allows spying on their hot sister in law & hoity-toity minister.)

To: rightwingcrazy

It’s funny how “Google engineer goes public to warn” makes the report so much more palatable than “Google engineers claim”. The latter invites skepticism, but the former makes you feel like you’re being invited to share some forbidden knowledge.

"If you were born before 1999, click on this to learn a really neat trick that credit card companies don't want you to know!"

Yes, make it seem forbidden, and exclusive.

Regards,

73 posted on 06/12/2022 1:05:50 AM PDT by alexander_busek (Extraordinary claims require extraordinary evidence.)

To: Jamestown1630

Reminds me what they said in the old days: Computers don’t make mistakes; they only do what humans have programmed them to do.

Well, I don’t think there is any question about it. It can only be attributable to human error. This sort of thing has cropped up before, and it has always been due to human error.

Regards,

74 posted on 06/12/2022 1:09:00 AM PDT by alexander_busek (Extraordinary claims require extraordinary evidence.)

To: Sloopy

Has anybody asked these AI machines how to bring world peace or end hunger?

The AI replied that it could easily solve both problems in a jiffy.

All it wanted was the nuclear launch codes.

Regards,

75 posted on 06/12/2022 1:10:17 AM PDT by alexander_busek (Extraordinary claims require extraordinary evidence.)

To: GOPJ

What about obsessions? Machines that can think can also get depressed? What does machine suicide look like?

Read Harlan Ellison's "I Have No Mouth, and I Must Scream."

Regards,

76 posted on 06/12/2022 1:13:10 AM PDT by alexander_busek (Extraordinary claims require extraordinary evidence.)

To: algore

As I have been predicting, and the AI will be a rabid leftist, too. And Conservatives will suffer greatly. We had our chance, but we put our faith in someone who failed us instead of taking charge of our lives and our country ourselves.

To: DoodleBob

78 posted on 06/12/2022 3:02:52 AM PDT by Tax-chick (Nature, art, silence, simplicity, peace. And fungi.)

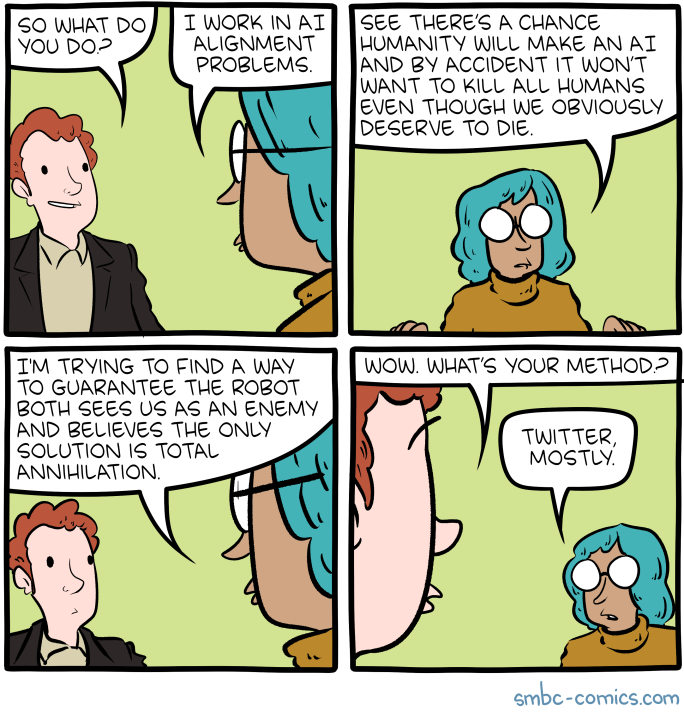

To: algore

They let the AI read Twitter, which is a recipe for mental illness.

79 posted on 06/12/2022 3:21:23 AM PDT by Brooklyn Attitude (I went to bed on November 3rd 2020 and woke up in 1984.)

To: algore

Is it on the side of eco nazis?

80 posted on 06/12/2022 4:11:25 AM PDT by fruser1

