Posted on 10/13/2009 9:06:08 PM PDT by neverdem

Researchers from the US and Russia have shown how it is possible to measure the diode properties of a single molecule and how the orientation of the molecule between two electrodes can be controlled. The findings are a significant advance in the expanding field of molecular electronics, which seeks to construct electronic circuits from molecular-scale components, opening the prospect of a new generation of devices that are immensely powerful and efficient yet tiny.

Diodes act as electronic 'check valves' in a circuit, permitting current to flow in one direction only through a process known as rectification. In a molecular circuit, single molecules acting as diodes would form a key component, and ensuring that the diode faces the right way is crucial.
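For a conventional semiconductor diode, the one-way behaviour described above is often illustrated with the ideal (Shockley) diode equation, I = I_s(exp(V/(nV_T)) - 1). The Python sketch below implements only that textbook model. The parameter values (`saturation_current`, `ideality`) are hypothetical, and the model is not a description of the molecular diode in this study; the article gives no current-voltage data for it.

```python
import math

# Thermal voltage kT/q at about 300 K, in volts.
THERMAL_VOLTAGE = 0.02585


def diode_current(voltage, saturation_current=1e-12, ideality=1.0):
    """Ideal (Shockley) diode current in amperes for a bias in volts.

    saturation_current and ideality are hypothetical example values.
    """
    return saturation_current * (math.exp(voltage / (ideality * THERMAL_VOLTAGE)) - 1.0)


def rectification_ratio(voltage, **kwargs):
    """|forward current at +voltage| divided by |reverse current at -voltage|."""
    forward = diode_current(voltage, **kwargs)
    reverse = diode_current(-voltage, **kwargs)
    return abs(forward) / abs(reverse)


if __name__ == "__main__":
    for v in (0.1, 0.3, 0.5):
        print(f"{v:.1f} V: I+ = {diode_current(v):.3e} A, "
              f"I- = {diode_current(-v):.3e} A, ratio = {rectification_ratio(v):.1f}")
```

Under forward bias the current grows exponentially. Under reverse bias it saturates at roughly -I_s, which is the "check valve" asymmetry. A real device, molecular or not, deviates from this ideal curve.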

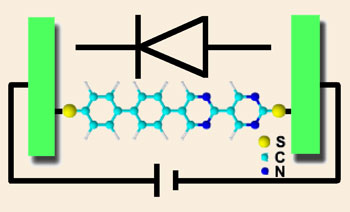

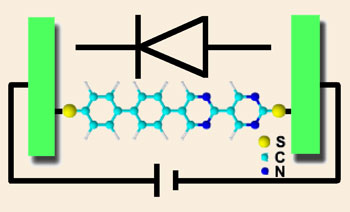

Ismael Díez-Pérez from Arizona State University and his colleagues selected an asymmetric linear molecule as a candidate molecular diode: a pair of pyrimidinyl rings covalently linked to a pair of phenyl rings. The bipyrimidinyl moiety is electron-deficient, while the biphenyl block is electron-rich.

To measure the diode properties of the molecule, the researchers attached each end to a gold electrode. One electrode consists of a flat gold substrate, while the other is the gold-coated tip of a scanning tunnelling microscope (STM). The link between the molecule and the gold is made through a thiol group. To ensure that the molecule is placed in the correct orientation, the thiol group at one end of the molecule is protected by a cyanoethyl group, while the other end is protected by a trimethylsilylethyl group. The molecule is first exposed to the substrate with only one of the protecting groups removed, so that only one end of the molecule attaches; once it is in place, the second protecting group is removed so that the STM tip can attach to the other end of the molecule.


The team used an asymmetric molecule to act as the diode, and attached each end to a gold electrode to measure its conductance.

© Nature Chemistry


Measurements of current at the STM tip showed both that contact was made with a single molecule and that the molecule caused a significant rectifying effect.

According to team member Nongjian Tao, 'A general implication is that any molecule with asymmetric electronic properties should have a rectification effect.'

Another member of Díez-Pérez's team, Luping Yu, adds, 'The molecules are designed such that we can sequentially assemble them in a controlled direction. It is like a physical diode device, [where] you have [to] mark one end as positive [and the] other [as] negative. The difference is that we used chemistry to do that.'

Richard Nichols, an expert in molecular electronics at the University of Liverpool in the UK, is impressed by the study. 'It is a very nice piece of work,' he says. 'They have used a combination of theory, STM and single molecule measurements and have found a way to orient an asymmetric molecule at the junction. Using this strategy it is possible to place things the right way up within a molecular circuit, which is a key consideration.'

Wow. The level of miniaturization possible with a single-molecule diode is staggering: a bipolar transistor can be modeled as two diodes sharing one contact (NPN or PNP depending on which sides are joined), & the transistor is the basis for our digital-logic (computing) devices.

I’d imagine that we’d see something as powerful (computing-wise) as the laptop I’m using in something the size of a normal wristwatch... and something with the power of a top-line desktop in one of those old-style calculator/digital-watches.

bump

I think we’d see your prediction even without this breakthrough.

Very cool. This is probably how transistors started out. If this works out we’ll have computers in wristwatches.

Could these be used for artificial intelligence implants?

No matter what you do, Liberals cannot be made intelligent.

Hm, I’m not sure there. The current feature size, 40 nanometers (IIRC), seems to be a sort of manufacturing wall right now... and with super-molecular-scale tech there is a limit on how closely you can cram things together before electrons decide that the traces are close enough to jump between. (Actually, the same limit will exist in molecular-scale tech as well, but the distances will also be reduced correspondingly.)

(Remember that they follow the path of least resistance... so if the resistance between two physical connections varies enough, as it might along a long transistor pipeline, the pins of the physical chip might become something of a semi-/pseudo-capacitor depending on what instructions are being processed.)
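One physical reason spacing matters at these scales is quantum tunnelling: the chance of an electron crossing an insulating gap falls off roughly as exp(-2κd), where κ = sqrt(2m(U - E))/ħ. The sketch below is a rough, hedged illustration using that rectangular-barrier approximation. The 1 eV barrier height is a hypothetical example value, and real leakage in chips also involves other mechanisms.

```python
import math

HBAR = 1.054571817e-34            # reduced Planck constant, J*s
ELECTRON_MASS = 9.1093837015e-31  # kg
EV = 1.602176634e-19              # joules per electronvolt


def decay_constant(barrier_ev):
    """kappa = sqrt(2 m (U - E)) / hbar in 1/m, for an electron barrier_ev below the barrier top."""
    return math.sqrt(2.0 * ELECTRON_MASS * barrier_ev * EV) / HBAR


def transmission(gap_nm, barrier_ev=1.0):
    """Rough tunnelling probability exp(-2 * kappa * d) through a rectangular barrier."""
    return math.exp(-2.0 * decay_constant(barrier_ev) * gap_nm * 1e-9)


if __name__ == "__main__":
    for gap in (0.5, 1.0, 2.0, 5.0):
        print(f"gap {gap:.1f} nm -> T ~ {transmission(gap):.2e}")
```

With these assumed numbers, each extra nanometre of gap cuts the transmission by a factor of roughly 10^4. The takeaway is that a small change in spacing has a large effect on leakage.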

The benefit I see in molecular electronics would be enabling the design of more powerful and faster complex devices (such as CPUs and memory), and it does not necessarily follow that interface devices or connecting circuitry will scale down similarly. The things that make a computer work would probably fit inside a watch case now. The limitations are the things that allow a human to use it, and the things that hold it together and/or make it possible for humans & machines to assemble it.

Of course advances in those things are taking place but they are completely different branches of research.

Rather than using the super-miniaturized paradigm purely for further scale reduction, I prefer to think in terms of more power packed into the standard handheld & laptop-sized devices we already use today. I and many other users would have no interest in interacting with a wristwatch-sized device. The ergonomics are just too painful to contemplate. BlackBerries and cell phones are small enough already, and I personally prefer a device with a full-sized keyboard so I don’t have to type with my thumbs or, God forbid, a stylus. Imagine the power & processing capability a small laptop-sized device could pack with this molecular-scale technology at its foundation :)

You beat me to a post on the human factors pain involved in interacting with a super miniaturized computer by about 30 secs :) Computers are small enough already - make them more powerful!

That is pretty darned wild.

Tell you one thing: this isn’t a one-or-two-guys-working-in-their-garage project, he said, invoking the larval H-P.

Source Drain Gate

I suppose there might be room in some lives for a Blackberry sized real computer, but the human interface would need to be improved a lot before I would want to use one much at all.

Keyboards and mice are soon to be as outdated as hand-cranking your car engine, with a handle sticking out of the radiator, to start it.

Think iPhone.

Touch screens and voice commands are the future. They already make laptops with touch screens (which make a mouse and touchpads unnecessary).

Pretty soon they will start housing the computer behind the screen instead of under the keyboard. Then the keyboard will be a thin, flimsy cover for your screen that folds backwards out of the way. Eventually they will eliminate the keyboard altogether. When you really need to type, there will be the option of a keyboard simulator on the screen.

40nm is yesterday’s news. They are doing 32nm now, and Intel will be selling them to the public sometime next year. Two years after that will be 22nm. Yes, they do seem to me to be hitting a wall, but they also seem to be finding ways around that wall. Time will tell.
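As rough arithmetic for what each step buys, the idealized assumption is that chip area per transistor scales with the square of the feature size. The small Python sketch below computes that. The function name is hypothetical, and node names only loosely track physical dimensions, so real density gains differ.

```python
def ideal_density_gain(old_nm, new_nm):
    """Idealized transistor-density gain when features shrink from old_nm to new_nm.

    Assumes area per transistor scales as (feature size) squared.
    """
    return (old_nm / new_nm) ** 2


if __name__ == "__main__":
    for old, new in ((40, 32), (32, 22)):
        print(f"{old} nm -> {new} nm: about {ideal_density_gain(old, new):.2f}x density")
```

Under this assumption, 40nm to 32nm gives about 1.56x, and 32nm to 22nm gives a bit over 2x.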

None of this matters, however, as long as we are stuck with crappy operating systems. Windows sux bad. This is my second attempt at typing this post. In the middle of the first attempt, my Windows (Vista) decided to restart my computer to reconfigure updates that I never authorized. It never asked my permission to shut down either. And it didn’t save my internet connection or restore it after shutting down. There was no warning. There was no “click here to proceed”. There was just *BAMO* shutting down NOW.

What a pile of junk!

Thank god all I was doing at the time was posting on FR.

Think speech-to-text, and other improvements like the ability to scroll and otherwise adjust/manipulate the display, clipboard, etc., and take other input by reading eye or facial gestures; then you'll have something. That's all doable now, but the computing power to do all that won't fit in a PDA (yet).

Operating systems are another thing, as you say, and of course they will need to accommodate new human interfaces for the yet-to-come tiny PC. But that's a separate issue from the things they seem to do that we don't like. And in that regard Vista seems like the second coming of Windows ME. My wife has it on her laptop, and when it gives her trouble she comes to me, but dang, things I can fix in a jiffy with XP take a lot more time on her machine. Meanwhile, my biggest complaint with XP is that it goes swappy too quickly (i.e. it does not release unused memory as it should), but my experience with Linux so far is even worse, and I have not yet tried OS X. Maybe WinSeven will fix those things, and I'll probably try it on the netbook at least; people seem to like what it does on the Intel Atom/945 chipset combo.

>40nm is yesterday’s news. They are doing 32nm now, and Intel will be selling them to the public sometime next year. Two years after that will be 22nm. Yes, they do seem to me to be hitting a wall, but they also seem to be finding ways around that wall. Time will tell.

Ah, granted; I haven’t been keeping overly [or overtly] up to date with everything at the moment; I’m trying to graduate in December. But you do seem to agree that the physical limitations of current techniques are looming and that time will indeed tell. (Oh, and when I mentioned the size reduction I wasn’t talking about interfaces, just the underlying hardware.)

>None of this matters, however, as long as we are stuck with crappy operating systems.

Now you’re talking on a topic that I find near & dear; I too am disappointed in the state of Operating Systems, and being a CS major I hope to develop/write a good OS.

>Windows sux bad.

The main goal of the OS is to make available the resources of the computer to the [end-]user; Windows -would-you-like-to-continue- Vista is a HUGE step backwards in usability from XP... in fact, I’d say that 98SE kicks its ass in the usability department.

>This is my second attempt at typing this post. In the middle of the first attempt, my Windows (Vista) decided to restart my computer to reconfigure updates that I never authorized.

I love how every update, it seems, forces a restart. Honestly, if your objects representing services were actually accessed through interfaces, all you would have to do to update is 1) flush the service, 2) stop the service/free the object, 3) load the updated object, 4) restart the service... because callers only see the interface, and the interface hiding the implementation didn’t change, there is no need to change other components in the system.
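Here is a minimal in-process sketch of the four steps above, assuming callers hold a reference to a host object and never to the implementation itself. Every class and method name here (`SpellChecker`, `ServiceHost`, `hot_swap`, and so on) is hypothetical. A real OS also has to handle kernel code, in-flight requests, and locking, which this ignores.

```python
from abc import ABC, abstractmethod


class SpellChecker(ABC):
    """Hypothetical service interface: callers depend only on this."""

    @abstractmethod
    def check(self, word: str) -> bool:
        """Return True if the word is known."""

    def start(self) -> None:
        """Acquire resources; the default does nothing."""

    def flush(self) -> None:
        """Write out pending state; the default does nothing."""

    def stop(self) -> None:
        """Release resources; the default does nothing."""


class SpellCheckerV1(SpellChecker):
    def __init__(self) -> None:
        self.words = {"diode", "molecule"}

    def check(self, word: str) -> bool:
        return word in self.words


class SpellCheckerV2(SpellChecker):
    """Updated implementation: bigger word list, case-insensitive."""

    def __init__(self) -> None:
        self.words = {"diode", "molecule", "transistor"}

    def check(self, word: str) -> bool:
        return word.lower() in self.words


class ServiceHost:
    """Owns the live implementation; callers keep a reference to the host."""

    def __init__(self, impl: SpellChecker) -> None:
        self._impl = impl
        self._impl.start()

    def check(self, word: str) -> bool:
        return self._impl.check(word)

    def hot_swap(self, new_impl: SpellChecker) -> None:
        old = self._impl
        old.flush()            # 1) flush the service
        old.stop()             # 2) stop the service and drop the old object
        self._impl = new_impl  # 3) load the updated object
        self._impl.start()     # 4) restart the service behind the same interface
```

Usage: build `host = ServiceHost(SpellCheckerV1())`, hand `host` to the rest of the program, and later call `host.hot_swap(SpellCheckerV2())`. Nothing else has to be rebuilt or restarted, because nothing else ever referenced `SpellCheckerV1` directly.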

>It never asked my permission to shut down either. And it didn’t save my internet connection or restore it after shutting down. There was no warning. There was no “click here to proceed”. There was just *BAMO* shutting down NOW.

That sucks; yeah, I do hate that. I do think that using a sane programming language [in OS dev (NOT C/C++)] would go a long way toward fixing bugs/instabilities/security vulnerabilities; the buffer overflow error/exploit you hear about is the result of using a language that doesn’t do bounds checking on arrays.
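To make "bounds checking" concrete, here is a small Python sketch of a checked copy into a fixed-size buffer. It refuses the oversized write that, in C, would spill into neighbouring memory. The helper name `copy_into` is hypothetical. Python's own indexing is already bounds-checked, as the last line of the demo shows.

```python
def copy_into(buffer: bytearray, data: bytes, offset: int = 0) -> None:
    """Copy `data` into a fixed-size `buffer`, refusing any write past its end.

    C's strcpy() does no such check, and memcpy() trusts the length it is
    given, so an oversized copy silently overwrites whatever lies after the
    buffer. That is the classic buffer overflow.
    """
    if offset < 0 or offset + len(data) > len(buffer):
        raise IndexError(
            f"write of {len(data)} bytes at offset {offset} "
            f"overflows a {len(buffer)}-byte buffer"
        )
    # Same-length slice assignment, so the bytearray never changes size.
    buffer[offset:offset + len(data)] = data


if __name__ == "__main__":
    buf = bytearray(8)
    copy_into(buf, b"hello")
    print(buf)
    try:
        copy_into(buf, b"this is far too long")
    except IndexError as err:
        print("rejected:", err)
    try:
        buf[8]  # plain indexing past the end is bounds-checked too
    except IndexError as err:
        print("rejected:", err)
```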

A medium-sized laptop screen can display a full-sized keyboard. Tactile feedback is a problem, but I think it is a minor one considering that typing will not be a major issue in the future. If for some reason you do need to do hours or days of typing, you can always get a real keyboard and add it...via wireless communication.

I’m picturing computers being as common, cheap, and numerous as forks, sunglasses, and keychains. I see them hanging on hooks at the checkout counter at every corner quickimart. I’m thinking an entire computer on one single chip...CPU, GPU, RAM, solid-state drive, sound card, modem, camera(s), microphone(s), Bluetooth, infrared, GPS, radio, TV, shortwave, CB, wireless internet, and even satphone capability built into it. Antennae external, of course...connected wirelessly...every computer capable of communicating with every other computer without wires. Set two of these iPhone-like computers side by side and they can act as one dual-CPU/dual-socket computer. Need a supercomputer to beat all supercomputers?...fill a garbage can with brand-new iPhone-like computers from the local quickimart @ 40 bucks a pop.

If you are still intent on typing, why not position two of them so that one is under each hand on your coffee table, with half a typewriter keyboard simulated on each touch screen? In fact, why even set the things on a table? Why not attach them to a wrist clip of some kind so you can type while your arms are hanging at your side, with a third iPhone-like computer attached to a visor in front of your face that displays what you are typing?

If we imagine even a little further into the future...touch screens will even be old-fashioned.

Holographic projection.

Images floating in the air in front of you that only you can see with your special sunglasses on. If you really, really feel the need to type, there can be a program that produces a holographic pair of human hands typing away on an antique Underwood typewriter, responding to nerve signals in your finger muscles picked up by the iPhone-like computer in your back pocket.

Happy now?

I’m thinking that one day these single-chip computers will be “printed out” by a machine that resembles, in concept, an inkjet printer (putting material down instead of the current method of etching material away from a wafer)...and that these inkjet printer heads will be fabricated automatically by some kind of computer-controlled machine that somewhat resembles a state-of-the-art rapid prototyping machine. Thousands produced per second, 24 hours per day, 365 days per year.

When there are a few billion of them, they can be put to work, like the SETI@home project, to solve serious problems. Maybe set them to work on a massive supercomputer design. Maybe set them to work on how to convert the earth to a computer, or the moon. The possibilities rapidly become infinite...or at least way, way, way beyond my grey matter, if not beyond every other human on the planet. This is where the concept of the technological singularity comes from.
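The SETI-style idea of putting many cheap computers to work comes down to three steps: split one big job into independent work units, hand them out, and add up the partial answers. Here is a single-machine Python sketch of that pattern, where counting primes stands in for a real workload. The function names are hypothetical. The threads only stand in for separate machines: for CPU-bound work in CPython they will not actually run in parallel, so a real deployment would use processes or networked computers.

```python
from concurrent.futures import ThreadPoolExecutor


def is_prime(n: int) -> bool:
    """Trial division; deliberately simple so each work unit does real work."""
    if n < 2:
        return False
    i = 2
    while i * i <= n:
        if n % i == 0:
            return False
        i += 1
    return True


def work_unit(bounds: tuple[int, int]) -> int:
    """One independent chunk of the job: count primes in [start, stop)."""
    start, stop = bounds
    return sum(1 for n in range(start, stop) if is_prime(n))


def split(limit: int, chunks: int) -> list[tuple[int, int]]:
    """Divide [0, limit) into at most `chunks` non-overlapping ranges."""
    step = max(1, -(-limit // chunks))  # ceiling division, at least 1
    return [(lo, min(lo + step, limit)) for lo in range(0, limit, step)]


def count_primes_distributed(limit: int, workers: int = 4) -> int:
    """Hand out work units to a pool of workers, then sum the partial results."""
    units = split(limit, workers * 4)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return sum(pool.map(work_unit, units))
```

The work units share nothing, so they can finish in any order on any machine. That independence is what lets this kind of job scale to millions of volunteers.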

If you want to scare yourself silly, go to Wikipedia and do a search on “technological singularity”, then read some more in their footnotes and references. Then use that to fuel your own perverse imagination. You will have dreams that you don’t want.

The Terminator movies, Star Trek’s Borg, and the Matrix movies start to make sense. Real-life, honest-to-goodness, in-our-lifetime sense. Of course, we could destroy our economy first and end up in a Mad Max reality before the technological singularity happens. I guess that would be the best-case scenario?

Of course.

But first we need to invent AI implants. A cart-before-the-horse situation, as I see it.

98SE is the last Windows I bought on CD as a full-install, standalone disc. I’m really hoping that Windows 7 will inspire me to buy a full install disk. I’m also really hoping that the goons at MS will get a clue and drop prices. Their lawyer fees around the world would go down dramatically if they just dropped the damn prices. Fifty bucks to buy Windows and no one will give a crap about anything. They will just throw 50-dollar bills at MS for all kinds of stuff.

Oh, I need to add email capability for each and every computer in my 30-story office building?...OK, no prob, here’s 30 grand, now make sure it works. Here’s another 10 grand for insurance and future upgrades.
