Posted on 05/04/2017 4:07:14 AM PDT by LibWhacker

“See you in the Supreme Court!” President Donald Trump tweeted last week, responding to lower court holds on his national security policies. But is taking cases all the way to the highest court in the land a good idea? Artificial intelligence may soon have the answer. A new study shows that computers can do a better job than legal scholars at predicting Supreme Court decisions, even with less information.

Several other studies have guessed at justices’ behavior with algorithms. A 2011 project, for example, used the votes of any eight justices from 1953 to 2004 to predict the vote of the ninth in those same cases, with 83% accuracy. A 2004 paper tried seeing into the future, by using decisions from the nine justices who’d been on the court since 1994 to predict the outcomes of cases in the 2002 term. That method had an accuracy of 75%.

The new study draws on a much richer set of data to predict the behavior of any set of justices at any time. Researchers used the Supreme Court Database, which contains information on cases dating back to 1791, to build a general algorithm for predicting any justice’s vote at any time. They drew on 16 features of each vote, including the justice, the term, the issue, and the court of origin. Researchers also added other factors, such as whether oral arguments were heard.

For each year from 1816 to 2015, the team created a machine-learning statistical model called a random forest. It looked at all prior years and found associations between case features and decision outcomes. Decision outcomes included whether the court reversed a lower court’s decision and how each justice voted. The model then looked at the features of each case for that year and predicted decision outcomes. Finally, the algorithm was fed information about the outcomes, which allowed it to update its strategy and move on to the next year.
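The year-by-year procedure described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the study used a random forest over 16 features, while this sketch swaps in a much simpler per-issue majority vote so that it runs with the standard library alone. The function name `walk_forward`, the `(year, issue, outcome)` tuple layout, and the toy cases are all made up for the example and do not come from the paper.

```python
from collections import Counter, defaultdict

# Hypothetical toy data: (year, issue, outcome). Not real Supreme Court cases.
TOY_CASES = [
    (2000, "tax", "reverse"),
    (2000, "tax", "reverse"),
    (2000, "speech", "affirm"),
    (2001, "tax", "reverse"),
    (2001, "speech", "affirm"),
    (2001, "health", "reverse"),
    (2002, "tax", "affirm"),
    (2002, "health", "reverse"),
]


def walk_forward(cases, first_year, last_year):
    """Predict each year's cases from earlier years only, then learn from them.

    Stand-in learner (an assumption, NOT the study's random forest):
    guess the most common past outcome for the case's issue, falling
    back to the most common past outcome overall.
    Returns (correct, total) over the predicted years.
    """
    by_issue = defaultdict(Counter)  # issue -> counts of outcomes seen so far
    overall = Counter()              # counts of outcomes across all issues

    # Warm up on everything before the first predicted year.
    for year, issue, outcome in cases:
        if year < first_year:
            by_issue[issue][outcome] += 1
            overall[outcome] += 1

    correct = total = 0
    for target in range(first_year, last_year + 1):
        this_year = [c for c in cases if c[0] == target]

        # Step 1: predict using only what was learned from prior years.
        for _, issue, outcome in this_year:
            counts = by_issue[issue] or overall
            guess = counts.most_common(1)[0][0] if counts else "reverse"
            correct += guess == outcome
            total += 1

        # Step 2: reveal the real outcomes and update before the next year.
        for _, issue, outcome in this_year:
            by_issue[issue][outcome] += 1
            overall[outcome] += 1

    return correct, total


if __name__ == "__main__":
    print(walk_forward(TOY_CASES, 2001, 2002))
```

The point of the two-step loop is that no case is ever predicted with knowledge of its own year's outcomes, which is what lets the reported accuracy stand in for real forecasting.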

From 1816 until 2015, the algorithm correctly predicted 70.2% of the court’s 28,000 decisions and 71.9% of the justices’ 240,000 votes, the authors report in PLOS ONE. That bests the popular betting strategy of “always guess reverse,” which has been the case in 63% of Supreme Court cases over the last 35 terms. It’s also better than another strategy that uses rulings from the previous 10 years to automatically go with a “reverse” or an “affirm” prediction. Even knowledgeable legal experts are only about 66% accurate at predicting cases, the 2004 study found. “Every time we’ve kept score, it hasn’t been a terribly pretty picture for humans,” says the study’s lead author, Daniel Katz, a law professor at Illinois Institute of Technology in Chicago.
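The two baseline strategies mentioned above are simple enough to write down. The sketch below is a hedged illustration, not the authors' code: it assumes the second baseline means "guess whichever outcome was more common over the previous 10 years," which is one reading of the article's description. The function names and the toy `(year, outcome)` data are hypothetical.

```python
from collections import Counter

# Hypothetical toy data: (year, outcome). Not real Supreme Court cases.
TOY_OUTCOMES = [
    (2000, "reverse"), (2000, "reverse"), (2000, "affirm"),
    (2001, "reverse"), (2001, "affirm"),
    (2002, "reverse"), (2002, "reverse"), (2002, "reverse"),
]


def always_reverse_accuracy(cases):
    """Accuracy of guessing 'reverse' every time: the share of reversals."""
    hits = sum(1 for _, outcome in cases if outcome == "reverse")
    return hits / len(cases)


def rolling_majority_accuracy(cases, window=10):
    """Accuracy of guessing the majority outcome of the previous `window` years.

    Years with no prior history inside the window are skipped.
    Returns None if no year could be scored.
    """
    years = sorted({year for year, _ in cases})
    correct = total = 0
    for target in years:
        recent = Counter(
            outcome for year, outcome in cases
            if target - window <= year < target
        )
        if not recent:
            continue  # nothing to base a guess on yet
        guess = recent.most_common(1)[0][0]
        for year, outcome in cases:
            if year == target:
                correct += guess == outcome
                total += 1
    return correct / total if total else None


if __name__ == "__main__":
    print(always_reverse_accuracy(TOY_OUTCOMES))
    print(rolling_majority_accuracy(TOY_OUTCOMES))
```

Any model worth using has to beat both numbers on the same cases; otherwise the extra features are adding nothing over a rule of thumb.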

Roger Guimerà, a physicist at Rovira i Virgili University in Tarragona, Spain, and lead author of the 2011 study, says the new algorithm “is rigorous and well done.” Andrew Martin, a political scientist at the University of Michigan in Ann Arbor and an author of the 2004 study, commends the new team for producing an algorithm that works well over 2 centuries. “They’re curating really large data sets and using state-of-the-art methods,” he says. “That’s scientifically really important.”

Outside the lab, bankers and lawyers might put the new algorithm to practical use. Investors could bet on companies that might benefit from a likely ruling. And appellants could decide whether to take a case to the Supreme Court based on their chances of winning. “The lawyers who typically argue these cases are not exactly bargain basement priced,” Katz says.

Attorneys might also plug different variables into the model to forge their best path to a Supreme Court victory, including which lower court circuits are likely to rule in their favor, or the best type of plaintiff for a case. Michael Bommarito, a researcher at Chicago-Kent College of Law and study co-author, offers a real example in National Federation of Independent Business v. Sebelius, in which the Affordable Care Act was on the line: “One of the things that made that really interesting was: Was it about free speech, was it about taxation, was it about some kind of health rights issues?” The algorithm might have helped the plaintiffs decide which issue to highlight.

Future extensions of the algorithm could include the full text of oral arguments or even expert predictions. Says Katz: “We believe the blend of experts, crowds, and algorithms is the secret sauce for the whole thing.”

I’m sure some FReepers could beat 75 percent by just knowing who the current SC justices are.

Crap in, crap out.

AI has to start with baseline assumptions.

Those assumptions are only valid if one tells the truth.

No way it accounts for blackmail.

Nor fake resumes being conservative up to the time of appointment, like Souter or Kennedy.

Fake news from “Science”... Furthermore, Science is a lefty mag, and anything they cover will be lefty scientists with lefty assumptions to feed their AI algorithms.

Can it account for chaos theory like butterfly wings or Roberts’ blackmail?

Nope.

ROFL!!!!

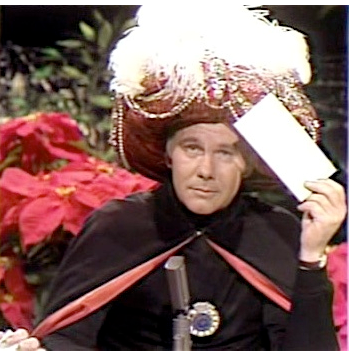

Funniest regular skit on a late-night talk show ever.

LOL. Since Supreme Court decisions are “supposed” to be based on the Constitution, I would expect a computer model to “close” to a specific model, i.e. the Constitution.

It could have been easily included in the analysis. I can only conclude it was found not to have been important. The analysis will tell you whether or not a proposed variable is or is not important enough to include in the model.

Nor fake resumes being conservative up to the time of appointment, like Souter or Kennedy.

Again, that variable could easily be tested for its importance and then be included or excluded from the model. If I were doing it, I would definitely test for it to see if it was important. But if it weren't, I wouldn't try to force it. I could see this one going either way.

Can it account for chaos theory like butterfly wings or Roberts’ blackmail?

Are butterfly wings important? No. Don't even have to do an analysis of that one.

Can it account for chaos theory like butterfly wings or Roberts’ blackmail?

Are butterfly wings important?

No. Don’t even have to do an analysis of that one.

.....

Weather prediction in chaos theory mentions as an example “butterfly wings”; this wasn’t meant literally but as a for instance...

I can’t stay in the 100% literal world and be a scientist as I’d miss sarcasm and be a social outcast.

I know enough about programming and AI and modeling and human behavior to know we can’t mimic it 100%.

Blackmail and fake resumes aren’t really necessary. The problem is the monopoly of legal theory taught in law schools. There is no source for lawyers with historically traditional views, and certainly no reservoir of them in the federal judiciary.

Federal courts, including the SC, need to be reined in by dramatic legislative action. That’s the only way forward from here.

Not hard to second-guess the 9th Circuit Court of Appeals. You won’t need artificial intelligence for that.

Crackpot algorithms for crackpots on the court.
