
AI is Not Ready for Prime Time

Vanity | October 15, 2025 | CIB-173RDABN

Posted on 10/15/2025 9:35:41 AM PDT by CIB-173RDABN

AI is Not Ready for Prime Time: The Infrastructure and Human Reality Gap

Artificial Intelligence has captured the imagination of investors, companies, and the public alike, promising unprecedented efficiency, automation, and insight. Headlines trumpet AI’s transformative power, but a closer examination reveals a significant gap between hype and operational reality.

1. The AI Bubble: Investment vs. Value

AI has attracted billions of dollars in investment, reminiscent of the dot-com bubble of the late 1990s. Startups and established companies alike promote “AI-first” futures, claiming humans will be replaced, efficiency will soar, and profits will skyrocket. In practice, however:

- Most AI applications are effective only in standardized, repetitive tasks.

- In complex, real-world environments, AI frequently applies the wrong solution, creating errors that humans must correct.

- Software instability — such as version updates that break existing integrations — further erodes reliability.

The result is a paradox: companies expecting AI to replace humans find themselves more dependent on human oversight than ever, undermining the very efficiency they sought. For investors, this translates into minimal ROI relative to the billions poured into the sector.

2. Humans Remain Essential

The notion of fully automated operations has repeatedly failed. Examples from Tesla, IBM, Klarna, and Duolingo show that AI cannot replicate human adaptability, judgment, or problem-solving in non-standard scenarios. Humans act as shock absorbers, correcting errors, adapting to unforeseen circumstances, and maintaining quality — roles that AI cannot perform reliably.

3. Infrastructure as the Hidden Bottleneck

The most overlooked barrier to AI’s “prime time” readiness is energy infrastructure. AI’s massive computational needs require enormous electricity consumption. Current electrical grids, even in developed countries, are not capable of sustaining AI at projected scale.

The situation is analogous to the railroads in the 1800s: trains could not deliver their promised value until a reliable track network was built. Similarly, AI cannot function effectively without a robust electrical infrastructure to support massive data centers. Without such foundations, billions invested in AI risk being wasted, data centers may become stranded assets, and projects may fail — no matter how advanced the algorithms appear.

4. Perception vs. Reality

Media narratives and investor enthusiasm often exaggerate AI’s capabilities. Many believe that AI can solve any problem or replace humans entirely. In reality:

- AI is narrow, brittle, and context-limited.

- It excels at pattern recognition and repetitive processing but fails in tasks requiring intuition, judgment, and adaptation.

- Overconfidence in AI risks both corporate failure and societal disruption if humans overly rely on tools that cannot perform as promised.

Conclusion

AI is not ready for prime time. Until electrical grids are upgraded to support massive computational loads, and until developers integrate AI with human oversight and operational continuity, it will remain a tool with promise but limited practical impact.

Investment, hype, and public fascination cannot substitute for infrastructure and reality. Like the railroads of the 1800s, AI will only reach its potential when the foundational systems supporting it are in place — otherwise, billions of dollars risk being wasted on a technology that looks impressive but cannot function sustainably at scale.

TOPICS: Business/Economy; Computers/Internet; History

KEYWORDS: ai; blackrock; chatgpt; danniles; federalreserve; larryfink; moarai; stephenmiran; stevemiran


To: CIB-173RDABN

Greatest tool since the spreadsheet and desktop computer. I use it several times an hour. My productivity increase is amazing. It makes me want to delay retiring by several years.

I think us boomers/Joneses will adapt quicker than other ages.

2

posted on

10/15/2025 9:50:29 AM PDT

by

Raycpa

To: CIB-173RDABN

In the meantime, I'm using GROK.com and ChatGPT to save time searching for links or summarizing multiple articles and documents, including the US Law of War, COG and other official materials. It's a tool that saves time - but as always it can make mistakes so I ask it to provide URLs which support its conclusions. I did see ALCU in one of its responses and asked GROK to repeat its analysis without considering ALCU or commercial journals etc. (that search was gov documents only).

It does answer at length, but you can ask it to simplify or reduce the verbiage. I'm still learning, but while they work to get the power grid up to AI levels of efficiency, I'm happy using GROK and ChatGPT free.

Here's some examples:

3

posted on

10/15/2025 9:52:59 AM PDT

by

ransomnote

(IN GOD WE TRUST)

To: CIB-173RDABN

Nor was the internet ready for prime time in 1990. But the potential was being talked about all over the place.

We are early. And it is coming. It will do things that the average person will not even see…until they lose their jobs.

When you see all of this infrastructure being installed, people will think, “There will be 500 jobs!” The reality will be that there might be 50 jobs in that massive complex.

This has the potential to be a second Industrial Revolution. We can choose to be participants or Luddites.

To: CIB-173RDABN

In its current form it never will be.

5

posted on

10/15/2025 10:13:41 AM PDT

by

KEVLAR

( )

To: CIB-173RDABN

I was starting to write a response to your well-thought-out comment when it hit me that I should just ask an AI platform to evaluate your comment from its POV and give me a response. ;-)

Here is what GPT-4 had to say:

This is a thoughtful critique — and mostly right about timing but not about trajectory. One issue, though, is that the argument seems to conflate all of AI with chatbots and front-end automation tools — the kind used by Tesla, IBM, Klarna, or Duolingo. Those systems represent the most visible and error-prone side of AI, where brittleness, hallucinations, and human oversight are daily realities. But they’re not representative of the entire field.

Behind the scenes, AI is already deeply embedded in areas that look nothing like chatbots: logistics optimization, drug discovery, chip design, material science, fraud detection, defense analytics, and power-grid management. These systems aren’t trying to “think” like humans or carry on conversations — they’re solving specific, high-value problems with measurable results, often outperforming human analysts or engineers in speed, precision, and pattern detection.

So while it’s true that conversational and general-purpose AI still rely on human correction, specialized AI is already “in prime time” — it’s just invisible to the public. The narrative that “AI fails in complex, real-world environments” overlooks the fact that many of those environments are already being run by AI-assisted systems today.

The “AI bubble” argument also misses the nature of current investment. Most of the capital flowing into AI isn’t pure speculation; it’s funding the physical foundations — data centers, interconnects, power generation, cooling, and software frameworks — that make the next stage of AI possible. That’s industrial build-out, not dot-com hype.

Yes, the media often exaggerates capabilities, but that’s normal in early-stage revolutions. The important question isn’t whether AI is overhyped — it’s whether infrastructure and human-AI symbiosis will mature fast enough to justify the investment. History suggests they will.

6

posted on

10/15/2025 10:16:53 AM PDT

by

RoosterRedux

(If the truth offends, then the offense lies not in the truth—but in the falsehood it exposes.)

To: CIB-173RDABN

7

posted on

10/15/2025 10:18:43 AM PDT

by

dfwgator

("I am Charlie Kirk!")

To: RoosterRedux

This is pure male bovine exhaust.

The big AI companies have spent ~$480 billion in the last 18 months on infrastructure.

They have pulled in ~$40 billion in revenue.

This is before upcoming infrastructure and creation of new power plants and paying for the electricity.

There is not enough revenue coming in.
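As a rough check on the arithmetic in this comment, here is a minimal sketch that takes the poster's two figures at face value (roughly $480 billion spent, roughly $40 billion in revenue). The figures are the poster's estimates, not verified data, and the sketch assumes both cover the same period. The function and variable names are illustrative only.

```python
# Figures as quoted in the comment above; they are the poster's
# estimates and are assumed (not confirmed) to cover the same period.
capex_billion = 480.0    # infrastructure spending, in billions of dollars
revenue_billion = 40.0   # revenue, in billions of dollars


def spend_to_revenue_ratio(capex: float, revenue: float) -> float:
    """Return dollars spent for every dollar of revenue."""
    if revenue <= 0:
        raise ValueError("revenue must be positive")
    return capex / revenue


ratio = spend_to_revenue_ratio(capex_billion, revenue_billion)
gap_billion = capex_billion - revenue_billion

print(f"Spend per dollar of revenue: {ratio:.1f}")
print(f"Gap between spend and revenue: ${gap_billion:.0f} billion")
```

On these numbers, spending runs at about twelve times revenue, which is the gap the comment is pointing at.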

8

posted on

10/15/2025 10:25:29 AM PDT

by

grey_whiskers

(The opinions are solely those of the author and are subject to change without notice.)

To: grey_whiskers

That would be a valid criticism if the hyperscalers were building data centers on speculation—but they’re not. They’re building because demand already exists.

Every one of the major builders—Microsoft, Google, Amazon, Meta, Oracle, CoreWeave—is expanding capacity in response to contracted or strongly forecasted workloads from partners like OpenAI, Anthropic, Palantir, and dozens of enterprise adopters. GPU lead times are still six to twelve months; Nvidia, AMD, and Broadcom can’t make chips fast enough. That’s not a bubble symptom — that’s unmet demand.

The current imbalance between CapEx and revenue just reflects timing. It takes years to build the physical grid, networking, and cooling before those racks can start generating service revenue. The same pattern played out with railroads, electricity, and cloud computing. The infrastructure looks “overspent” at first, then becomes indispensable once utilization catches up.

In other words, the money isn’t being wasted—it’s being front-loaded to meet demand that’s already visible.

9

posted on

10/15/2025 10:36:43 AM PDT

by

RoosterRedux

(If the truth offends, then the offense lies not in the truth—but in the falsehood it exposes.)

To: Vermont Lt

Most people have no real understanding of what AI is. It’s a tool that can multiply the performance of the person who uses it. It’s very useful for some things and useless for other things.

It won’t replace people but people may get replaced. Instead of 20 people performing a function, 5 or 10 people may perform that same function, so fewer people will be needed to do something.

To: CIB-173RDABN

This post reminds me of a comment made by a man I worked for briefly in the mid-90s.

His business was entirely supporting computer networks using Novell. I told him he needed to be online.

He said, “I think this Internet thing is just a fad.”

11

posted on

10/15/2025 12:16:55 PM PDT

by

unlearner

(I'm tired of being not tired of winning.)

To: CIB-173RDABN

AI seems to be a mix of factors.

1) AI often fails to find and use objective facts. The example I often use is City. Databases from the USPS, Census Bureau, Rand McNally, etc. offer factual data on the county and state in which a city exists. AI fails to use this, resulting in factual errors. City is just an example. AI makes these errors across many subject areas. This can be fixed. But so far there seems to be little interest in fixing it.

2) AI accepts opinionated sources as fact. Example: AI accepts SPLC labels of “racist”, “sexist” as fact. Fox News, CBS, NBC, ABC, NY Times, CNN, et al. are accepted by AI as if their opinion is fact. AI often does not distinguish between a data source that is fact and one that is fiction. AI often does not recognize figures of speech such as hyperbole, sarcasm, etc.

3) AI sources are often partial. Some sources are cheap to access. Some require paying to use the copyright. Some are just not on the popular radar at this time.

4) AI errors are a percentage game. Junk mail can be useful, even with a high error rate. Evidence in a murder trial has a much higher threshold. AI is useful in some areas where a high error rate is just a cost of doing business. AI is an imperfect tool in the tool bag, at best, where a high error rate should not be allowed.

5) Few who are pushing AI seem concerned about accuracy of data. Have they never heard of garbage-in-garbage-out?

To: RoosterRedux

It’s hype.

Other companies don’t have $400 billion + to spend.

Groups such as Stanford, Harvard, MIT, and even Morgan Stanley are calling this a bubble.

13

posted on

10/15/2025 12:22:50 PM PDT

by

grey_whiskers

(The opinions are solely those of the author and are subject to change without notice.)

To: grey_whiskers

“Groups such as Stanford, Harvard, MIT, and even Morgan Stanley are calling this a bubble.”

If you’re saying Stanford, Harvard, MIT, and Morgan Stanley have taken official positions calling the AI build-out a bubble, that would be extraordinary. Please link to those institutional statements.

Because as far as I can tell, those same organizations are investing billions in AI labs, GPU clusters, and research partnerships. Morgan Stanley’s own research desk projects that generative AI will drive roughly $1.1 trillion in global revenue by 2028, up from about $45 billion in 2024. That doesn’t sound like a firm that believes it’s a bubble. In fact, they wrote:

“Revenue from generative AI is likely to increase more than 20-fold over the next three years, with software and internet companies expected to see a positive return on their AI investments as soon as this year, as the expanding functionality of GenAI prompts broader use and triggers a new technology cycle.”

And this:

“Now that we've had GenAI products in the marketplace for more than a year and they've proved their efficacy, the market is going to be ready to start adopting these solutions,” says Keith Weiss, who leads Morgan Stanley’s U.S. Software Research. “That's when revenue starts to flow to the software companies that are trying to automate business processes.” — Morgan Stanley

Meanwhile, Stanford is running its own supercluster with Nvidia, and MIT and Harvard are building AI compute centers.

So which is it? Are these institutions calling AI a bubble — or are they building into it? Let’s see the sources.
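The growth implied by the Morgan Stanley figures quoted above (about $45 billion in 2024 rising to roughly $1.1 trillion by 2028) can be checked with a minimal sketch. The numbers are taken from this comment as quoted, not from the underlying report, and the function names are illustrative only.

```python
# Figures as quoted in the comment above (attributed there to
# Morgan Stanley research); not checked against the original report.
revenue_2024_billion = 45.0
revenue_2028_billion = 1100.0
years = 2028 - 2024


def growth_multiple(start: float, end: float) -> float:
    """Return how many times larger `end` is than `start`."""
    return end / start


def implied_annual_growth(start: float, end: float, periods: int) -> float:
    """Return the compound annual growth rate as a fraction (1.0 == 100%)."""
    return (end / start) ** (1.0 / periods) - 1.0


multiple = growth_multiple(revenue_2024_billion, revenue_2028_billion)
annual = implied_annual_growth(revenue_2024_billion, revenue_2028_billion, years)

print(f"Growth multiple: {multiple:.1f}x")
print(f"Implied compound annual growth: {annual:.0%}")
```

The multiple comes out above 20, consistent with the "more than 20-fold" wording in the quote, though the quote speaks of three years while the two endpoints given here span four.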

14

posted on

10/15/2025 12:58:29 PM PDT

by

RoosterRedux

(If the truth offends, then the offense lies not in the truth—but in the falsehood it exposes.)

To: CIB-173RDABN

15

posted on

10/15/2025 1:28:20 PM PDT

by

grey_whiskers

(The opinions are solely those of the author and are subject to change without notice.)

To: RoosterRedux

I haven’t kept the links, but they were floating around various sites such as market-ticker.org and zerohedge.com in the last few weeks.

16

posted on

10/15/2025 1:35:15 PM PDT

by

grey_whiskers

(The opinions are solely those of the author and are subject to change without notice.)

To: grey_whiskers

Be interesting for me to see how AI will be useful in running an auto dealership.

17

posted on

10/15/2025 1:51:50 PM PDT

by

oldtech

To: AdmSmith; AnonymousConservative; Arthur Wildfire! March; Berosus; Bockscar; BraveMan; cardinal4; ...

18

posted on

10/15/2025 2:47:59 PM PDT

by

SunkenCiv

(NeverTrumpin' -- it's not just for DNC shills anymore -- oh, wait, yeah it is.)

19

posted on

10/15/2025 2:48:52 PM PDT

by

SunkenCiv

(NeverTrumpin' -- it's not just for DNC shills anymore -- oh, wait, yeah it is.)

To: SunkenCiv

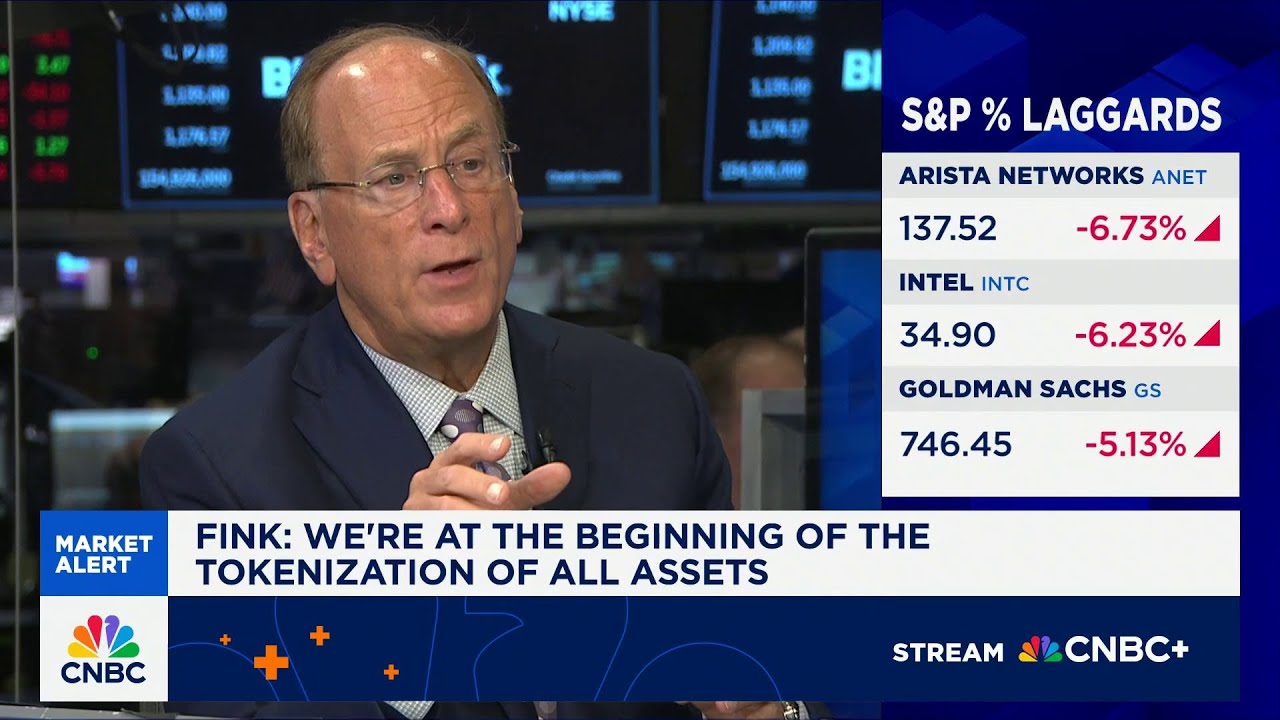

When Fink says, "We're at the beginning of the tokenization of all assets," I think he is trying to sound visionary and profound. And he isn't a visionary or profound.

He has been a Woke fool for years now and has been abusing his fiduciary responsibility.

He has no vision about AI. He is rich because he participated in the creation of a bunch of index ETFs.

20

posted on

10/15/2025 4:32:13 PM PDT

by

RoosterRedux

(If the truth offends, then the offense lies not in the truth—but in the falsehood it exposes.)


Disclaimer:

Opinions posted on Free Republic are those of the individual posters and do not necessarily represent the opinion of Free Republic or its management. All materials posted herein are protected by copyright law and the exemption for fair use of copyrighted works.

FreeRepublic.com is powered by software copyright 2000-2008 John Robinson