1. A robot may not injure a human being or, through inaction, allow a human being to come to harm.

2. A robot must obey the orders given to it by human beings, except where such orders would conflict with the First Law.

3. A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.

"Robots" killing humans are not robots but autonomous weapon systems.

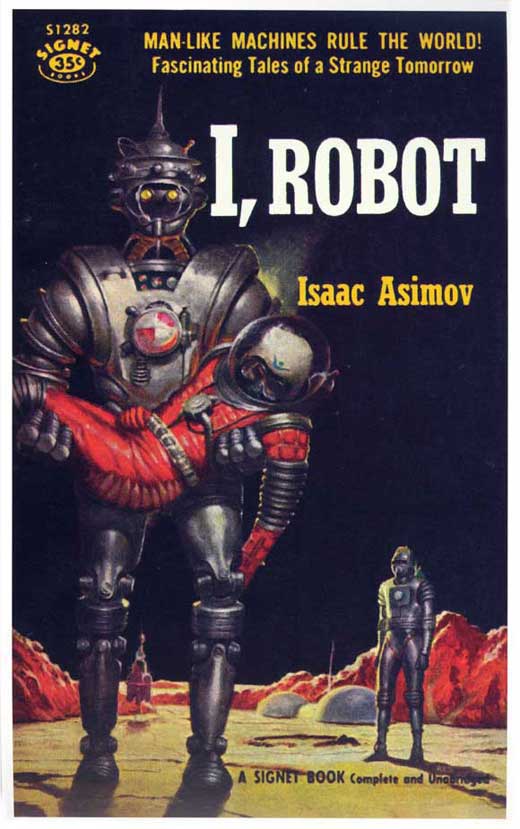

I know, many will ask: who the hell is Asimov?

Hard to believe he started that whole line of thought (or at least first shared it with the public) clear back in 1939. Think of how little technology was available to him when he pulled those ideas together.

http://www.asimovonline.com/oldsite/Robot_Foundation_history_1.html

The "through inaction" part of the First Law has all sorts of potential unintended consequences, as the robots try to prevent us from harming each other or ourselves.