*******************************EXCERPT*******************************************

Errors in IPCC climate science at warwickhughes.com | January 14th, 2011 | Warwick Hughes

Guest article by Pat Frank

We’ve all read the diagnosis that the global climate has suffered “unprecedented warming” since about 1900. The accepted increase across the 20th century is 0.7 (+/-)0.2 C. As an experimental chemist, I always wondered at that “(+/-)0.2 C.” In my experience, it seemed an awfully narrow uncertainty, given the exigencies of instruments and outdoor measurements.

When I read the literature, going right back to such basics as Phil Jones’ early papers [1, 2], I found no mention of instrumental uncertainty in their discussions of sources of error.

The same is true in Jim Hansen’s papers, e.g. [3]. It was as though the instrumental readings themselves were canonical, and the only uncertainties were in inhomogeneities arising from such things as station moves, instrumental changes, change in time of observation, and so on.

But Phil Brohan’s 2006 paper [4], on which Phil Jones was a co-author, discussed error analysis more thoroughly than had previously been done. Warwick has posted on the change that occurred in 2005, when the UK Met Office took over from the Climate Research Unit of the UEA in compiling the global average temperature record. So, maybe Phil Brohan decided to be more forthcoming about their error models.

The error analysis in Brohan, 2006, revealed that they’d been taking a signal-averaging approach to instrumental error. The assumption was that all the instrumental error was random, independent, and identically distributed (iid). The mean of this kind of error tends toward zero as large numbers of measurements are averaged together.
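A short simulation makes the iid assumption concrete. For independent errors with standard deviation σ, the error of an average of N readings has standard deviation σ/√N, so it shrinks as N grows; a fixed systematic offset, by contrast, passes through the average unchanged. The sketch below assumes Gaussian noise with σ = 0.2 C and a hypothetical 0.3 C bias; the function name `mean_error` and both numbers are illustrative only and are not taken from Brohan et al. or from the paper.

```python
import math
import random
import statistics


def mean_error(n: int, sigma: float, bias: float = 0.0, seed: int = 0) -> float:
    """Average error of n simulated readings.

    Each reading's error is a fixed (hypothetical) systematic bias plus
    iid Gaussian noise with standard deviation sigma, all in degrees C.
    """
    rng = random.Random(seed)
    errors = [bias + rng.gauss(0.0, sigma) for _ in range(n)]
    return statistics.fmean(errors)


if __name__ == "__main__":
    sigma = 0.2  # illustrative iid noise level, C
    bias = 0.3   # hypothetical systematic offset, C
    for n in (10, 1_000, 100_000):
        iid_only = mean_error(n, sigma)
        with_bias = mean_error(n, sigma, bias=bias)
        expected_spread = sigma / math.sqrt(n)  # standard error of the mean
        print(f"n={n:>7}: iid-only {iid_only:+.4f} C "
              f"(expected spread {expected_spread:.4f} C), "
              f"with bias {with_bias:+.4f} C")
```

As n grows, the iid-only column shrinks toward zero while the biased column settles near the 0.3 C offset, which is the distinction between random and systematic error that the argument turns on.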

To make a long story short, it turned out that no one had ever surveyed the temperature sensors of climate stations to see whether the assumption of uniformly iid measurement errors could be empirically validated.

That led to my study, and the study led to the paper that is just out in Energy and Environment [5]. Here’s the title and the abstract:

Title: “Uncertainty in the Global Average Surface Air Temperature Index: A Representative Lower Limit”

Abstract: “Sensor measurement uncertainty has never been fully considered in prior appraisals of global average surface air temperature. The estimated average (+/-)0.2 C station error has been incorrectly assessed as random, and the systematic error from uncontrolled variables has been invariably neglected. The systematic errors in measurements from three ideally sited and maintained temperature sensors are calculated herein. Combined with the (+/-)0.2 C average station error, a representative lower-limit uncertainty of (+/-)0.46 C was found for any global annual surface air temperature anomaly. This (+/-)0.46 C reveals that the global surface air temperature anomaly trend from 1880 through 2000 is statistically indistinguishable from 0 C, and represents a lower limit of calibration uncertainty for climate models and for any prospective physically justifiable proxy reconstruction of paleo-temperature. The rate and magnitude of 20th century warming are thus unknowable, and suggestions of an unprecedented trend in 20th century global air temperature are unsustainable.”
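The abstract does not spell out here how the systematic sensor errors and the (+/-)0.2 C station error are combined. The usual convention for independent 1σ uncertainty components is to add them in quadrature (root-sum-square). The sketch below shows only that generic rule; the function name `combine_in_quadrature` and the 0.3 C second component are hypothetical, and this is not a reconstruction of the paper's own arithmetic.

```python
import math


def combine_in_quadrature(*components: float) -> float:
    """Root-sum-square of independent 1-sigma uncertainty components (C).

    Valid only when the components are statistically independent.
    """
    return math.sqrt(sum(c * c for c in components))


if __name__ == "__main__":
    # Hypothetical inputs: a 0.2 C random component and an illustrative
    # 0.3 C systematic component. These are NOT the paper's values.
    total = combine_in_quadrature(0.2, 0.3)
    print(f"combined 1-sigma uncertainty: +/-{total:.3f} C")
```

Because the squares are summed, the combined value is never smaller than the largest single component, so a sizeable systematic term dominates the total regardless of how small the random part becomes.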

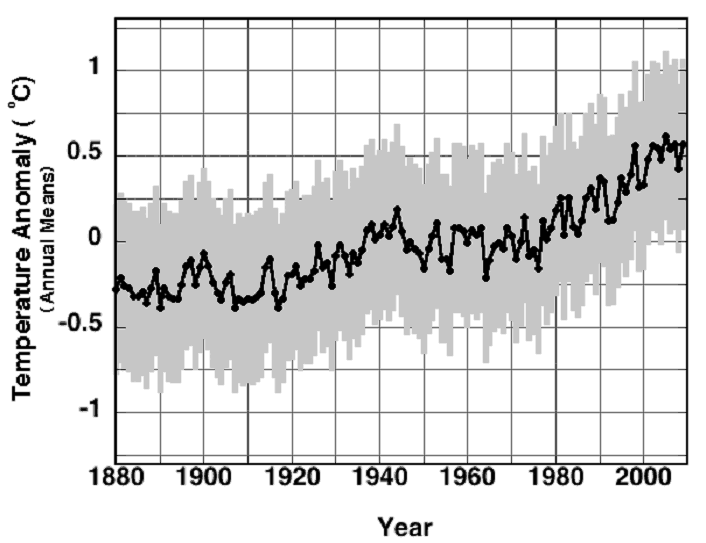

Here’s the upshot of the study in graphical form: Figure 3 from the paper, showing the 20th century average surface air temperature trend with the lower limit of instrumental uncertainty as grey error bars.

Figure Legend: (•), the global surface air temperature anomaly series through 2009, as updated on 18 February 2010 (data.giss.nasa.gov/gistemp/graphs/). The grey error bars show the annual anomaly lower-limit uncertainty of (+/-)0.46 C.

The lower limit of error was based in part on the systematic error displayed by the Maximum-Minimum Temperature System (MMTS) under ideal site conditions. I chose the MMTS because that sensor has been the replacement instrument of choice in the USHCN since about 1990.

This lower limit of instrumental uncertainty implies that Earth’s fever is indistinguishable from zero Celsius, at the 1σ level, across the entire 20th century.