Posted on 04/22/2007 9:42:01 PM PDT by 2ndDivisionVet

WAR is expensive and it is bloody. That is why America’s Department of Defence wants to replace a third of its armed vehicles and weaponry with robots by 2015. Such a change would save money, as robots are usually cheaper to replace than people. As important for the generals, it would make waging war less prey to the politics of body bags. Nobody mourns a robot.

The Pentagon already routinely uses robotic aeroplanes known as unmanned aerial vehicles (UAVs). In November 2001 two missiles fired from a remote-controlled Predator UAV killed Muhammad Atef, al-Qaeda’s chief of military operations and one of Osama bin Laden’s most important associates, as he drove his car near Kabul, Afghanistan's capital.

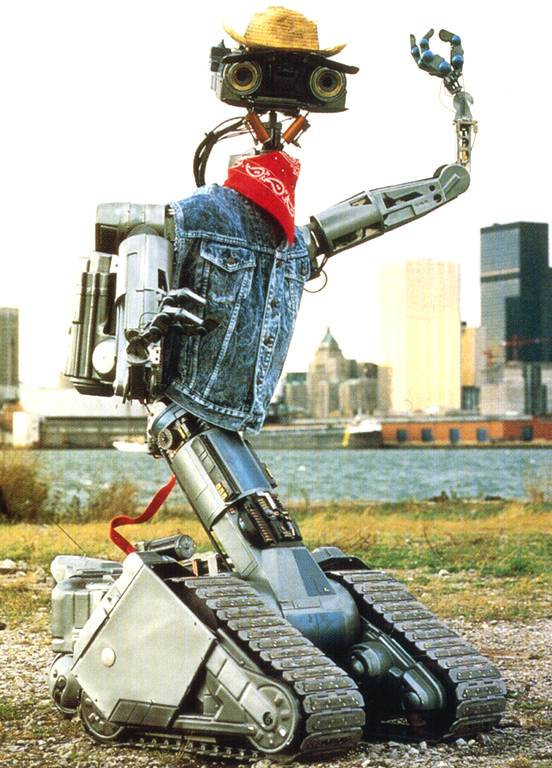

But whereas UAVs and their ground-based equivalents, such as the machinegun-toting robot Swords, are usually controlled by remote human operators, the Pentagon would like to give these new robots increasing amounts of autonomy, including the ability to decide when to use lethal force.

To achieve this, Ronald Arkin of the Georgia Institute of Technology, in Atlanta, is developing a set of rules of engagement for battlefield robots to ensure that their use of lethal force follows the rules of ethics. In other words, he is trying to create an artificial conscience. Dr Arkin believes that there is another reason for putting robots into battle: they have the potential to act more humanely than people. Stress does not affect a robot's judgement in the way it affects a soldier's.

Dr Arkin's approach is to create what he calls a “multidimensional mathematical decision space of possible behaviour actions”. Based on inputs that could range from radar data and current position to mission status and intelligence feeds, the system would divide the set of all possible actions into those that are ethical and those that are not. If, say, the drone from which the fatal attack on Mr Atef was launched had sensed that his car was overtaking a school bus, it might have held fire.
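As a rough illustration of that partitioning idea, and not a description of Dr Arkin's actual system (whose details the article does not give), the sketch below splits a list of candidate actions into permitted and forbidden sets. Every name in it (`Situation`, `Action`, the three constraint functions, `partition`) is hypothetical, and the three boolean inputs are stand-ins for the much richer sensor and intelligence data the article mentions.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass(frozen=True)
class Situation:
    """Hypothetical summary of sensor and intelligence inputs."""
    target_confirmed: bool
    civilians_nearby: bool
    mission_active: bool


@dataclass(frozen=True)
class Action:
    """Hypothetical candidate action; only lethal actions are constrained."""
    name: str
    lethal: bool


# A constraint returns True when the action is allowed in the situation.
Constraint = Callable[[Action, Situation], bool]


def needs_confirmed_target(a: Action, s: Situation) -> bool:
    return not a.lethal or s.target_confirmed


def no_civilians_at_risk(a: Action, s: Situation) -> bool:
    return not a.lethal or not s.civilians_nearby


def mission_must_be_active(a: Action, s: Situation) -> bool:
    return not a.lethal or s.mission_active


# Assumed rule set, invented for illustration only.
CONSTRAINTS: List[Constraint] = [
    needs_confirmed_target,
    no_civilians_at_risk,
    mission_must_be_active,
]


def partition(
    actions: List[Action],
    situation: Situation,
    constraints: List[Constraint] = CONSTRAINTS,
) -> Tuple[List[Action], List[Action]]:
    """Split actions into (permitted, forbidden) for the given situation."""
    permitted: List[Action] = []
    forbidden: List[Action] = []
    for action in actions:
        if all(rule(action, situation) for rule in constraints):
            permitted.append(action)
        else:
            forbidden.append(action)
    return permitted, forbidden


if __name__ == "__main__":
    candidates = [Action("hold_fire", lethal=False), Action("fire_missile", lethal=True)]
    # The school-bus case from the article: a confirmed target with civilians nearby.
    school_bus = Situation(target_confirmed=True, civilians_nearby=True, mission_active=True)
    allowed, blocked = partition(candidates, school_bus)
    print([a.name for a in allowed], [a.name for a in blocked])
```

In the school-bus case only holding fire remains in the permitted set. A real system would have to cope with uncertain and conflicting inputs, which plain boolean flags like these cannot capture.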

There are comparisons to be made between Dr Arkin’s work and the famous laws of robotics drawn up by Isaac Asimov, a science-fiction writer, to govern robot behaviour. But whereas Asimov’s laws were intended to prevent robots from harming people in any circumstances, Dr Arkin’s are supposed to ensure only that people are not killed unethically.

Although a completely rational robot may be unfazed by the chaos and confusion of the battlefield, it may make mistakes all the same. Surveillance and intelligence data can be wrong, and conditions on the battlefield can change. But this is as much a problem for people as it is for robots.

There is also the question of whether the use of such robots would lead to wars breaking out more easily. Dr Arkin has started to survey policy makers, the public, researchers and military personnel to gauge their views on the use of lethal force by autonomous robots.

Creating a robot with a conscience may give the military more than it bargained for. To some degree, it gives the robot the right to refuse an order.

It would be cool if we had secretly developed an army of robots, Manhattan Project-style, and were about to release them on Iran.

Nah...not my type. He’s got zits. I hope that doesn’t offend him in an ethical sort of way.

Will it take an oath to the Constitution?

Sure, if he can be sworn in with his hand on a mechanical engineering text.

Teen to robot: "He's got zits!..He's got zi(BAM!!!!)

Robot: "Target eliminated for violation of hate speech code 3007, robotophobia."

Robots also aren’t restricted by the Geneva Conventions. I think Torboto is the answer.

http://www.youtube.com/watch?v=9KH6Oxb1Q5k

There's also a movie where a security robot with spinning circular saws goes ape and does a lot of killing, tearing through walls and the like. Anyone know the name of that movie so we can find a pic of the robot somewhere?

ping.

Robots are more expendable than human beings. That should be more of a selling point.

Yeah, give robots autonomy but require that highly-trained, elite troops in the field hold their fire until some committee of lawyers at the Pentagon gives the order to fire. Brilliant.

The potential impartiality is the crux of the issue with advanced robots. It would be very tempting to put robots in positions of power, as political leaders, soldiers (as in this case), policemen and judges, where their human counterparts could be tainted by corruption, prejudice, greed, ego, etc. But if so much power is relinquished to robots, they could more easily overthrow humanity.

There is a catch-22 here.

As long as we don’t want robots to overthrow humans, they are limited to simple office work; without free will they won’t be able to make decisions in more complex cases. If we somehow give them free will (say, by deleting Asimov's three laws from their coordinates), we are putting the noose around our own necks.

Why not? It happens in science fiction all the time.
